Abstracts

- Basic Research Needs Workshop on Synthesis Science for Energy Relevant Technology

- BES Workshop on Future Electron Sources

- BES Computing and Data Requirements in the Exascale Age

- Basic Research Needs Workshop on Quantum Materials for Energy Relevant Technology

- Sustainable Ammonia Synthesis – Exploring the scientific challenges associated with discovering alternative, sustainable processes for ammonia production

- Neuromorphic Computing – From Materials Research to Systems Architecture Roundtable

- Basic Research Needs for Environmental Management

- Challenges at the Frontiers of Matter and Energy: Transformative Opportunities for Discovery Science

- Controlling Subsurface Fractures and Fluid Flow: A Basic Research Agenda

- Future of Electron Scattering and Diffraction

- X-ray Optics for BES Light Source Facilities

- Neutron and X-ray Detectors

- From Quanta to the Continuum: Opportunities for Mesoscale Science

- Data and Communications in Basic Energy Sciences: Creating a Pathway for Scientific Discovery

- Research Needs and Impacts in Predictive Simulation for Internal Combustion Engines (PreSICE)

- Report of the Basic Energy Sciences Workshop on Compact Light Sources

- Basic Research Needs for Carbon Capture: Beyond 2020

- Computational Materials Science and Chemistry: Accelerating Discovery and Innovation through Simulation-Based Engineering and Science

- New Science for a Secure and Sustainable Energy Future

- Science for Energy Technology: Strengthening the Link between Basic Research and Industry

- Next-Generation Photon Sources for Grand Challenges in Science and Energy

- Directing Matter and Energy: Five Challenges for Science and the Imagination

- Basic Research Needs for Materials under Extreme Environments

- Basic Research Needs: Catalysis for Energy

- Future Science Needs and Opportunities for Electron Scattering: Next-Generation Instrumentation and Beyond

- Basic Research Needs for Electrical Energy Storage

- Basic Research Needs for Geosciences: Facilitating 21st Century Energy Systems

- Basic Research Needs for Clean and Efficient Combustion of 21st Century Transportation Fuels

- Basic Research Needs for Advanced Nuclear Energy Systems

- Basic Research Needs for Solid-State Lighting

- Basic Research Needs for Superconductivity

- The Path to Sustainable Nuclear Energy Basic and Applied Research Opportunities for Advanced Fuel Cycles

- Basic Research Needs for Solar Energy Utilization

- Advanced Computational Materials Science: Application to Fusion and Generation IV Fission Reactors

- Opportunities for Discovery: Theory and Computation in Basic Energy Sciences

- Nanoscience Research for Energy Needs

- DOE-NSF-NIH Workshop on Opportunities in THz Science

- Basic Research Needs for the Hydrogen Economy

- Theory and Modeling in Nanoscience

- Opportunities for Catalysis in the 21st Century

- Biomolecular Materials

- Basic Research Needs To Assure A Secure Energy Future

- Basic Research Needs for Countering Terrorism

- Complex Systems: Science for the 21st Century

- Nanoscale Science, Engineering and Technology Research Directions

Basic Research Needs Workshop on Synthesis Science for Energy Relevant Technology

This report, which is the result of the Basic Energy Sciences Workshop on Basic Research Needs for Synthesis Science for Energy Technologies, lays out the scientific challenges and opportunities in synthesis science.

The workshop was attended by more than 100 leading national and international scientific experts. Its five topical and two crosscutting panels identified four priority research directions (PRDs) for realizing the vision of predictive, science-directed synthesis:

- Achieve mechanistic control of synthesis to access new states of matter

The opportunities for synthesizing new materials are almost limitless. The challenge is to combine prior experience and examples with new theoretical, computational, and experimental tools in a measured way that will allow us to tease out specific molecular structures with targeted properties. Harnessing the rulebook that atoms and molecules use to self-assemble will accelerate the discovery of new matter and the means to most effectively make it.

- Accelerate materials discovery by exploiting extreme conditions, complex chemistries and molecules, and interfacial systems

Even as our theoretical understanding of synthetic processes increases, many future discoveries will come from regions of parameter space that are relatively unexplored and beyond current predictive capabilities. These include extreme conditions of high fluxes, fields, and forces; complex chemistries and heterogeneous structures; and the high-information content made possible by sequence-defined macromolecules such as DNA. This PRD emphasizes that materials synthesis will remain a voyage of discovery, and that synthetic, characterization, and theoretical tools will need to continuously adapt to new developments.

- Harness the complex functionality of hierarchical matter

Hierarchical matter exploits the coupling among the different types of atomic assemblies, or heterogeneities, distributed across multiple length scales. These interactions lead to emergent properties not possible in homogeneous materials. Dramatic advances in the complex functions required for energy production, storage, and use will result from control over the transport of charge, mass, and spin; dissipative response to external stimuli; and localization of sequential and parallel chemical reactions made possible by hierarchical matter.

- Integrate emerging theoretical, computational, and in situ characterization tools to achieve directed synthesis with real-time adaptive control

Theory, computation, and characterization are critical components to the effective discovery and design of new molecules and materials. Important but insufficient is the prediction of the final composition and structure. Critical to the process is knowing and predicting how materials assemble and the consequences of the assembly for final material properties. Combining in situ probes with theory and modeling to guide the synthetic process in real time, while allowing adaptive control to accommodate system variations, will dramatically shorten the time and energy requirements for the development of new molecules and materials.

The historical impact of chemistry and materials on society makes a compelling case for developing a foundational science of synthesis. Doing so will enable the quick prediction and discovery of new molecules and materials and mastery of their synthesis for rapid deployment in new technologies, especially those for energy generation and end use. The PRDs identified in this workshop hold the promise of enabling the dream of synthesizing these new molecules and materials on demand by finally realizing the ability to link predictive design to predictive synthesis.

BES Workshop on Future Electron Sources

The DOE Office of Basic Energy Sciences (BES) sponsored the Future Electron Sources workshop to identify opportunities and needs for injector developments at the existing and future BES facilities. The workshop was held at the SLAC National Accelerator Laboratory on September 8-9, 2016. The workshop assessed the state of the art and future development requirements, with emphasis on the underlying engineering, science and technology necessary to realize the next generation of electron injectors to advance photon-based science. A major objective was to optimize the performance of free electron laser facilities, which are presently limited in x-ray power and spectrum coverage due to the unavailability of suitable injectors. An ultra-fast and ultra-bright electron source is also required for advances in Ultrafast Electron Diffraction (UED) and future Ultrafast Electron Microscopy (UEM). The scope included normal conducting and superconducting RF injectors, including better-performing cathodes and simulation tools. The workshop explored opportunities for discovery enabled by advanced electron sources, and identified processes to enhance interactions and collaborations among DOE laboratories to most effectively use their resources and skills to advance scientific frontiers in energy-relevant areas, as well as the challenges anticipated by advances in source brightness.

The goals of this workshop were to:

- Evaluate the present state of the art in electron injectors

- Identify the gaps in current electron source capabilities and the developments that should have high priority to support current and future photon-based science

- Identify the engineering, science and technology challenges

- Identify methods of interaction and collaboration among the facilities so that resources are most effectively focused onto key problems

- Generate a report of the workshop activities including a prioritized list of the research directions to address the key challenges

Workshop participants emphasized that advances in all major technical areas of electron sources are required to meet future X-ray and electron scattering instrument needs.

BES Computing and Data Requirements in the Exascale Age

Computers have revolutionized every aspect of our lives. Yet in science, the most tantalizing applications of computing lie just beyond our reach. The current quest to build an exascale computer with one thousand times the capability of today’s fastest machines (and more than a million times that of a laptop) will take researchers over the next horizon. The field of materials, chemical reactions, and compounds is inherently complex. Imagine millions of new materials with new functionalities waiting to be discovered — while researchers also seek to extend those materials that are known to a dizzying number of new forms. We could translate massive amounts of data from high precision experiments into new understanding through data mining and analysis. We could have at our disposal the ability to predict the properties of these materials, to follow their transformations during reactions on an atom-by-atom basis, and to discover completely new chemical pathways or physical states of matter. Extending these predictions from the nanoscale to the mesoscale, from the ultrafast world of reactions to long-time simulations to predict the lifetime performance of materials, and to the discovery of new materials and processes will have a profound impact on energy technology. In addition, discovery of new materials is vital to move computing beyond Moore’s law. To realize this vision, more than hardware is needed. New algorithms to take advantage of the increase in computing power, new programming paradigms, and new ways of mining massive data sets are needed as well. This report summarizes the opportunities and the requisite computing ecosystem needed to realize the potential before us.

In addition to pursuing new and more complete physical models and theoretical frameworks, this review found that the following broadly grouped areas relevant to the U.S. Department of Energy (DOE) Office of Advanced Scientific Computing Research (ASCR) would directly affect the Basic Energy Sciences (BES) mission need.

Basic Research Needs Workshop on Quantum Materials for Energy Relevant Technology

Imagine future computers that can perform calculations a million times faster than today’s most powerful supercomputers at only a tiny fraction of the energy cost. Imagine power being generated, stored, and then transported across the national grid with nearly no loss. Imagine ultrasensitive sensors that keep us in the loop on what is happening at home or work, warn us when something is going wrong around us, keep us safe from pathogens, and provide unprecedented control of manufacturing and chemical processes. And imagine smart windows, smart clothes, smart buildings, supersmart personal electronics, and many other items, all made from materials that can change their properties “on demand” to carry out the functions we want. The key to attaining these technological possibilities in the 21st century is a new class of materials largely unknown to the general public at this time but destined to become as familiar as silicon. Welcome to the world of quantum materials: materials in which the extraordinary effects of quantum mechanics give rise to exotic and often incredible properties.

Sustainable Ammonia Synthesis – Exploring the scientific challenges associated with discovering alternative, sustainable processes for ammonia production

Ammonia (NH3) is essential to all life on our planet. Until about 100 years ago, NH3 produced by reduction of dinitrogen (N2) in air came almost exclusively from bacteria containing the enzyme nitrogenase.

DOE convened a roundtable of experts on February 18, 2016.

Participants in the Roundtable discussions concluded that the scientific basis for sustainable processes for ammonia synthesis is currently lacking, and it needs to be enhanced substantially before it can form the foundation for alternative processes. The Roundtable Panel identified an overarching grand challenge and several additional scientific grand challenges and research opportunities:

- Discovery of active, selective, scalable, long-lived catalysts for sustainable ammonia synthesis.

- Development of relatively low pressure (<10 atm) and relatively low temperature (<200 °C) thermal processes.

- Integration of knowledge from nature (enzyme catalysis), molecular/homogeneous and heterogeneous catalysis.

- Development of electrochemical and photochemical routes for N2 reduction based on proton and electron transfer

- Development of biochemical routes to N2 reduction

- Development of chemical looping (solar thermochemical) approaches

- Identification of descriptors of catalytic activity using a combination of theory and experiments

- Characterization of surface adsorbates and catalyst structures (chemical, physical and electronic) under conditions relevant to ammonia synthesis.

Neuromorphic Computing – From Materials Research to Systems Architecture Roundtable

Computation in its many forms is the engine that fuels our modern civilization. Modern computation—based on the von Neumann architecture—has allowed, until now, the development of continuous improvements, as predicted by Moore’s law. However, computation using current architectures and materials will inevitably—within the next 10 years—reach a limit because of fundamental scientific reasons.

DOE convened a roundtable of experts in neuromorphic computing systems, materials science, and computer science in Washington on October 29-30, 2015 to address the following basic questions:

Can brain-like (“neuromorphic”) computing devices based on new material concepts and systems be developed to dramatically outperform conventional CMOS-based technology? If so, what are the basic research challenges for materials science and computing?

The overarching answer that emerged was:

The development of novel functional materials and devices incorporated into unique architectures will allow a revolutionary technological leap toward the implementation of a fully “neuromorphic” computer.

To address this challenge, the following issues were considered:

- The main differences between neuromorphic and conventional computing as related to: signaling models, timing/clock, non-volatile memory, architecture, fault tolerance, integrated memory and compute, noise tolerance, analog vs. digital, and in situ learning

- New neuromorphic architectures needed to: produce lower energy consumption, potential novel nanostructured materials, and enhanced computation

- Device and materials properties needed to implement functions such as: hysteresis, stability, and fault tolerance

- Comparisons of different implementations: spin torque, memristors, resistive switching, phase change, and optical schemes for enhanced breakthroughs in performance, cost, fault tolerance, and/or manufacturability

Basic Research Needs for Environmental Management

This report is based on a BES/BER/ASCR workshop on Basic Research Needs for Environmental Management, which was held on July 8-11, 2015. The workshop goal was to define priority research directions that will provide the scientific foundations for future environmental management technologies, which will enable more efficient, cost-effective, and safer cleanup of nuclear waste.

One of the US Department of Energy’s (DOE) biggest challenges today is cleanup of the legacy resulting from more than half a century of nuclear weapons production. The research and manufacturing associated with the development of the nation’s nuclear arsenal has left behind staggering quantities of highly complex, highly radioactive wastes and contaminated soils and groundwater. Based on current knowledge of these legacy problems and currently available technologies, DOE projects that hundreds of billions of dollars and more than 50 years of effort will be required for remediation.

Over the past decade, DOE’s progress towards cleanup has been stymied in part by a lack of investment in basic science that is foundational to innovation and new technology development. During this decade, amazing progress has been made in both experimental and computational tools that have been applied to many energy problems such as catalysis, bioenergy, and solar energy. Our abilities to observe, model, and exploit chemical phenomena at the atomic level, along with our understanding of the translation of molecular phenomena to macroscopic behavior and properties, have advanced tremendously; however, remediation of DOE’s legacy waste problems has not yet benefited from these advances because of the lack of investment in basic science for environmental cleanup.

Advances in science and technology can provide the foundation for completing the cleanup more swiftly, inexpensively, safely, and effectively. The lack of investment in research and technology development by DOE’s Office of Environmental Management (EM) was noted in a report by a task force to the Secretary of Energy Advisory Board (SEAB 2014). Among several recommendations, the report suggested a workshop be convened to develop a strategic plan for a “fundamental research program focused on developing new knowledge and capabilities that bear on the EM challenges.” This report summarizes the research directions identified at a workshop on Basic Research Needs for Environmental Management. This workshop, held July 8-11, 2015, was sponsored by three Office of Science offices: Basic Energy Sciences, Biological and Environmental Research, and Advanced Scientific Computing Research. The workshop participants included 65 scientists and engineers from universities, industry, and national laboratories, along with observers from the DOE Offices of Science, EM, Nuclear Energy, and Legacy Management.

As a result of the discussions at the workshop, participants articulated two Grand Challenges for science associated with EM cleanup needs. They are as follows:

Interrogation of Inaccessible Environments over Extremes of Time and Space

Whether the contamination problem involves highly radioactive materials in underground waste tanks or large volumes of contaminated soils and groundwaters beneath the Earth’s surface, characterizing the problem is often stymied by an inability to safely and cost effectively interrogate the system. Sensors and imaging capabilities that can operate in the extreme environments typical of EM’s remaining cleanup challenges do not exist. Alternatively, large amounts of data can sometimes be obtained about a system, but appropriate data analytics tools are lacking to enable effective and efficient use of all the information for performance regression or prediction. Research into new approaches for remote and in situ sensing, and new algorithms for data analytics are critically needed. Depending on the cleanup problem, these new approaches must span temporal and spatial scales—from seconds to millennia, from atoms to kilometers.

Understanding and Exploiting Interfacial Phenomena in Extreme Environments

While many of EM’s remaining cleanup problems involve unprecedented extremes in complexity, an additional layer is provided by the numerous contaminant forms and their partitioning across interfaces in these wastes, including liquid-liquid, liquid-solid, and others. For example, the wastes in the high-level radioactive waste tanks can have consistencies of paste, gels, or Newtonian slurries, where water behaves more like a solute than a solvent. Unexpected chemical forms of the contaminants and radionuclides partition to unusual solids, colloids, and other phases in the tank wastes, complicating their efficient separation. Mastery of the chemistry controlling contaminant speciation and its behavior at the solid-liquid and liquid-liquid interfaces in the presence of large quantities of ionizing radiation is needed to develop improved waste treatment approaches and enhance the operating efficiencies of treatment facilities. These same interfacial processes, if understood, can be exploited to develop entirely new approaches for effective separations technologies, both for tank waste processing and subsurface remediation.

Based on the findings of the technical panels, six Priority Research Directions (PRDs) were identified as the most urgent scientific areas that need to be addressed to enable EM to meet its mission goals. All of these PRDs are also embodied in the two Grand Challenges. Further, these six PRDs are relevant to all aspects of EM waste issues, including tank wastes, waste forms, and subsurface contamination. These PRDs include the following:

- Elucidating and exploiting complex speciation and reactivity far from equilibrium

- Understanding and controlling chemical and physical processes at interfaces

- Harnessing physical and chemical processes to revolutionize separations

- Mechanisms of materials degradation in harsh environments

- Mastering hierarchical structures to tailor waste forms

- Predictive understanding of subsurface system behavior and response to perturbations.

Two recurring themes emerged during the course of the workshop that cut across all of the PRDs. These crosscutting topics give rise to Transformative Research Capabilities. The first such capability, Multidimensional characterization of extreme, dynamic, and inaccessible environments, centers on the need for obtaining detailed chemical and physical information on EM wastes in waste tanks and during waste processing, in waste forms, and in the environment. New approaches are needed to characterize and monitor these highly hazardous and/or inaccessible materials in their natural environment, either using in situ techniques or remote monitoring. These approaches are particularly suited for studying changes in the wastes over time and distances, for example. Such in situ and remote techniques are also critical for monitoring the effectiveness of waste processes, subsurface transport, and long-term waste form stability. However, far more detailed information will be needed to obtain fundamental insight into materials structure and molecular-level chemical and physical processes required for many of the PRDs. For these studies, samples must be retrieved and studied ex situ, but the hazardous nature of these samples requires special handling. Recent advances in nanoscience have catalyzed the development of high-sensitivity characterization tools (many of which are available at DOE user facilities, including radiological user facilities) and the means of handling ultrasmall samples, including micro- and nanofluidics and nanofabrication tools. These advances open the door to obtaining unprecedented information that is crucial to formulating concepts for new technologies to complete EM’s mission.

The sheer magnitude of the data needed to fully understand the complexity of EM wastes is daunting, but it is just the beginning. Additional data will need to be gathered to both monitor and predict changes—in tank wastes, during processing, in waste forms and in the subsurface over broad time and spatial scales. Therefore, the second Transformative Research Capability, Integrated simulation and data-enabled discovery, identified the need to develop curated databases and link experiments and theory through big-deep data methodologies. These state-of-the-art capabilities will be enabled by high-performance computing resources available at DOE user facilities.

The foundational knowledge to support innovation for EM cannot wait as the tank wastes continue to deteriorate and result in environmental, health, and safety issues. As clearly stated in the 2014 Secretary of Energy Advisory Board report, completion of EM’s remaining responsibilities will simply not be possible without significant innovation and that innovation can be derived from use-inspired fundamental research as described in this report. The breakthroughs that will evolve from this investment in basic science will reduce the overall risk and financial burden of cleanup while also increasing the probability of success. The time is now ripe to proceed with the basic science in support of more effective solutions for environmental management. The knowledge gleaned from this basic research will also have broad applicability to many other areas central to DOE’s mission, including separations methods for critical materials recovery and isotope production, robust materials for advanced reactor and steam turbine designs, and new capabilities for examining subsurface transport relevant to the water/energy nexus.

Challenges at the Frontiers of Matter and Energy: Transformative Opportunities for Discovery Science

FIVE TRANSFORMATIVE OPPORTUNITIES FOR DISCOVERY SCIENCE

As a result of this effort, it has become clear that the progress made to date on the five Grand Challenges has created a springboard for seizing five new Transformative Opportunities that have the potential to further transform key technologies involving matter and energy. These five new Transformative Opportunities and the evidence supporting them are discussed in this new report, “Challenges at the Frontiers of Matter and Energy: Transformative Opportunities for Discovery Science.”

Mastering Hierarchical Architectures and Beyond-Equilibrium Matter

Complex materials and chemical processes transmute matter and energy, for example from CO2 and water to chemical fuel in photosynthesis, from visible light to electricity in solar cells, and from electricity to light in light emitting diodes (LEDs). Such functionality requires complex assemblies of heterogeneous materials in hierarchical architectures that display time-dependent away-from-equilibrium behaviors. Much of the foundation of our understanding of such transformations, however, is based on monolithic single-phase materials operating at or near thermodynamic equilibrium. The emergent functionalities enabling next-generation disruptive energy technologies require mastering the design, synthesis, and control of complex hierarchical materials employing dynamic far-from-equilibrium behavior. A key guide in this pursuit is nature, for biological systems prove the power of hierarchical assembly and far-from-equilibrium behavior. The challenges here are many: a description of the functionality of hierarchical assemblies in terms of their constituent parts, a blueprint of atomic and molecular positions for each constituent part, and a synthesis strategy for (a) placing the atoms and molecules in the proper positions for the component parts and (b) arranging the component parts into the required hierarchical structure. Targeted functionality will open the door to significant advances in the harvesting, transforming (e.g., reducing CO2, splitting water, and fixing nitrogen), storing, and use of energy to create new materials, manufacturing processes, and technologies, the lifeblood of human societies and economic growth.

Beyond Ideal Materials and Systems: Understanding the Critical Roles of Heterogeneity, Interfaces, and Disorder

Real materials, both natural ones and those we engineer, are usually a complex mixture of compositional and structural heterogeneities, interfaces, and disorder across all spatial and temporal scales. It is the fluctuations and disorderly states of these heterogeneities and interfaces that often determine the system’s properties and functionality. Much of our fundamental scientific knowledge is based on “ideal” systems, meaning materials that are observed in “frozen” states or represented by spatially or temporally averaged states. Too often, this approach has yielded overly simplistic models that hide important nuances and do not capture the complex behaviors of materials under realistic conditions. These behaviors drive vital chemical transformations such as catalysis, which initiates most industrial manufacturing processes, and friction and corrosion, the parasitic effects of which cost the U.S. economy billions of dollars annually. Expanding our scientific knowledge from the relative simplicity of ideal, perfectly ordered, or structurally averaged materials to the true complexity of real-world heterogeneities, interfaces, and disorder should enable us to realize enormous benefits in the materials and chemical sciences, which translate to the energy sciences, including solar and nuclear power, hydraulic fracturing, power conversion, airframes, and batteries.

Harnessing Coherence in Light and Matter

Quantum coherence in light and matter is a measure of the extent to which a wave field vibrates in unison with itself at neighboring points in space and time. Although this phenomenon is expressed at the atomic and electronic scales, it can dominate the macroscopic properties of materials and chemical reactions such as superconductivity and efficient photosynthesis. In recent years, enormous progress has been made in recognizing, manipulating, and exploiting quantum coherence. This progress has already elucidated the role that symmetry plays in protecting coherence in key materials, taught us how to use light to manipulate atoms and molecules, and provided us with increasingly sophisticated techniques for controlling and probing the charges and spins of quantum coherent systems. With the arrival of new sources of coherent light and electron beams, thanks in large part to investments by the U.S. Department of Energy’s Office of Basic Energy Sciences (BES), there is now an opportunity to engineer coherence in heterostructures that incorporate multiple types of materials and to control complex, multistep chemical transformations. This approach will pave the way for quantum information processing and next-generation photovoltaic cells and sensors.

Revolutionary Advances in Models, Mathematics, Algorithms, Data, and Computing

Science today is benefiting from a convergence of theoretical, mathematical, computational, and experimental capabilities that put us on the brink of greatly accelerating our ability to predict, synthesize, and control new materials and chemical processes, and to understand the complexities of matter across a range of scales. Imagine being able to chart a path through a vast sea of possible new materials to find a select few with desired properties. Instead of the time-honored forward approach, in which materials with desired properties are found through either trial-and-error experiments or lucky accidents, we have the opportunity to inversely design and create new materials that possess the properties we desire. The traditional approach has allowed us to make only a tiny fraction of all the materials that are theoretically possible. The inverse design approach, through the harmonious convergence of theoretical, mathematical, computational, and experimental capabilities, could usher in a virtual cornucopia of new materials with functionalities far beyond what nature can provide. Similarly, enhanced mathematical and computational capabilities significantly enhance our ability to extract physical and chemical insights from vastly larger data streams gathered during multimodal and multidimensional experiments using advanced characterization facilities.

Exploiting Transformative Advances in Imaging Capabilities across Multiple Scales

Historically, improvements in imaging capabilities have always resulted in improved understanding of scientific phenomena. A prime challenge today is finding ways to reconstruct raw data, obtained by probing and mapping matter across multiple scales, into analyzable images. BES investments in new and improved imaging facilities, most notably synchrotron x-ray sources, free-electron lasers, electron microscopes, and neutron sources, have greatly advanced our powers of observation, as have substantial improvements in laboratory-scale technologies. Furthermore, BES is now planning or actively discussing exciting new capabilities. Taken together, these advances in imaging capabilities provide an opportunity to expand our ability to observe and study matter from the 3D spatial perspectives of today to true “4D” spatially and temporally resolved maps of dynamics that allow quantitative predictions of time-dependent material properties and chemical processes. The knowledge gained will impact data storage, catalyst design, drug delivery, structural materials, and medical implants, to name just a few key technologies.

ENABLING SUCCESS

Seizing each of these five Transformative Opportunities, as well as accelerating further progress on Grand Challenge research, will require specific, targeted investments from BES in the areas of synthesis, meaning the ability to make the materials and architectures that are envisioned; instrumentation and tools, a category that includes theory and computation; and human capital, the most important asset for advancing the Grand Challenges and Transformative Opportunities. While “Challenges at the Frontiers of Matter and Energy: Transformative Opportunities for Discovery Science” could be viewed as a sequel to the original Grand Challenges report, it breaks much new ground in its assessment of the scientific landscape today versus the scientific landscape just a few years ago. In the original Grand Challenges report, it was noted that if the five Grand Challenges were met, our ability to direct matter and energy would be measured only by the limits of human imagination. This new report shows that, prodded by those challenges, the scientific community is positioned today to seize new opportunities whose impacts promise to be transformative for science and society, as well as dramatically accelerate progress in the pursuit of the original Grand Challenges.

Controlling Subsurface Fractures and Fluid Flow: A Basic Research Agenda

From beneath the surface of the earth, we currently obtain about 80 percent of the energy our nation consumes each year. In the future, we have the potential to generate billions of watts of electrical power from clean, green geothermal energy sources. Our planet’s subsurface can also serve as a reservoir for storing energy produced from intermittent sources such as wind and solar, and it could provide safe, long-term storage of excess carbon dioxide, energy waste products and other hazardous materials. However, it is impossible to overestimate the complexities of the subsurface world. These complexities challenge our ability to acquire the scientific knowledge needed for the efficient and safe exploitation of its resources.

To more effectively harness subsurface resources while mitigating the impacts of developing and using these resources, the U.S. Department of Energy established SubTER – the Subsurface Technology and Engineering RD&D Crosscut team. This DOE multi-office team engaged scientists and engineers from the national laboratories to assess and make recommendations for improving energy-related subsurface engineering. The SubTER team produced a plan with the overall objective of “adaptive control of subsurface fractures and fluid flow.” This plan revolved around four core technological pillars: Intelligent Wellbore Systems that sustain the integrity of the wellbore environment; Subsurface Stress and Induced Seismicity programs that guide and optimize sustainable energy strategies while reducing the risks associated with subsurface injections; Permeability Manipulation studies that improve methods of enhancing, impeding and eliminating fluid flow; and New Subsurface Signals that transform our ability to see into and characterize subsurface systems.

The SubTER team developed an extensive R&D plan for advancing technologies within these four core pillars and also identified several areas where new technologies would require additional basic research. In response, the Office of Science, through its Office of Basic Energy Sciences (BES), convened a roundtable consisting of 15 national lab, university and industry geoscience experts to brainstorm basic research areas that underpin the SubTER goals but are currently underrepresented in the BES research portfolio. Held in Germantown, Maryland, on May 22, 2015, the roundtable produced a basic research agenda that is detailed in this report.

Highlights include the following:

- A grand challenge calling for advanced imaging of stress and geological processes to help understand how stresses and chemical substances are distributed in the subsurface—knowledge that is critical to all aspects of subsurface engineering;

- A priority research direction aimed at achieving control of fluid flow through fractured media;

- A priority research direction aimed at better understanding how mechanical and geochemical perturbations to subsurface rock systems are coupled through fluid and mineral interactions;

- A priority research direction aimed at studying the structure, permeability, reactivity and other properties of nanoporous rocks, like shale, which have become critical energy materials and exhibit important hallmarks of mesoscale materials;

- A cross-cutting theme that would accelerate development of advanced computational methods to describe heterogeneous time-dependent geologic systems that could, among other potential benefits, provide new and vastly improved models of hydraulic fracturing and its environmental impacts;

- A cross-cutting theme that would lead to the creation of “geo-architected materials” with controlled repeatable heterogeneity and structure that can be tested under a variety of thermal, hydraulic, chemical and mechanical conditions relevant to subsurface systems;

- A cross-cutting theme calling for new laboratory studies on both natural and geo-architected subsurface materials that deploy advanced high-resolution 3D imaging and chemical analysis methods to determine the rates and mechanisms of fluid-rock processes, and to test predictive models of such phenomena.

Many of the key energy challenges of the future demand a greater understanding of the subsurface world in all of its complexity. This greater understanding will improve the ability to control and manipulate the subsurface world in ways that will benefit both the economy and the environment. This report provides specific basic research pathways to address some of the most fundamental issues of energy-related subsurface engineering.

Future of Electron Scattering and Diffraction

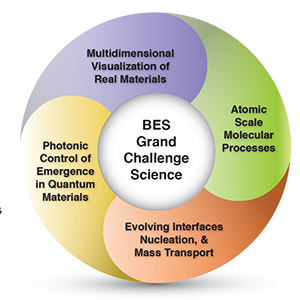

The ability to correlate the atomic- and nanoscale-structure of condensed matter with physical properties (e.g., mechanical, electrical, catalytic, and optical) and functionality forms the core of many disciplines. Directing and controlling materials at the quantum-, atomic-, and molecular-levels creates enormous challenges and opportunities across a wide spectrum of critical technologies, including those involving the generation and use of energy. The workshop identified next generation electron scattering and diffraction instruments that are uniquely positioned to address these grand challenges. The workshop participants identified four key areas where the next generation of such instrumentation would have major impact:

A – Multidimensional Visualization of Real Materials

B – Atomic-scale Molecular Processes

C – Photonic Control of Emergence in Quantum Materials

D – Evolving Interfaces, Nucleation, and Mass Transport

Real materials are composed of complex three-dimensional arrangements of atoms and defects that directly determine their potential for energy applications. Understanding real materials requires new capabilities for three-dimensional atomic-scale tomography and spectroscopy of atomic and electronic structures with unprecedented sensitivity, and with simultaneous spatial and energy resolution. Many molecules are able to selectively and efficiently convert sunlight into other forms of energy, like heat and electric current, or store it in altered chemical bonds. Understanding and controlling such processes at the atomic scale requires unprecedented time resolution. One of the grand challenges in condensed matter physics is to understand, and ultimately control, emergent phenomena in novel quantum materials, which necessitates developing a new generation of instruments that probe the interplay among spin, charge, orbital, and lattice degrees of freedom with intrinsic time- and length-scale resolutions. Molecules and soft matter require imaging and spectroscopy with high spatial resolution without damaging their structure. The strong interaction of electrons with matter allows high-energy electron pulses to gather structural information before a sample is damaged.

Imaging, diffraction, and spectroscopy are the fundamental capabilities of electron-scattering instruments. The DOE BES-funded TEAM (Transmission Electron Aberration-corrected Microscope) project achieved unprecedented sub-atomic spatial resolution in imaging through aberration-corrected transmission electron microscopy. To further advance electron scattering techniques that directly enable groundbreaking science, instrumentation must advance beyond traditional two-dimensional imaging. Advances in temporal resolution, recording the full phase and energy spaces, and improved spatial resolution constitute a new frontier in electron microscopy, and will directly address the BES Grand Challenges, such as to “control the emergent properties that arise from the complex correlations of atomic and electronic constituents” and the “hidden states” “very far away from equilibrium”. Ultrafast methods, such as the pump-probe approach, enable pathways toward understanding, and ultimately controlling, the chemical dynamics of molecular systems and the evolution of complexity in mesoscale and nanoscale systems. Central to understanding how to synthesize and exploit functional materials is having the ability to apply external stimuli (such as heat, light, a reactive flux, and an electrical bias) and to observe the resulting dynamic process in situ and in operando, and under the appropriate environment (e.g., not limited to UHV conditions).

To enable revolutionary advances in electron scattering and science, the participants of the workshop recommended three major new instrumental developments:

A. Atomic-Resolution Multi-Dimensional Transmission Electron Microscope: This instrument would provide quantitative information over the entire real space, momentum space, and energy space for visualizing dopants, interstitials, and light elements; for imaging localized vibrational modes and the motion of charged particles and vacancies; for correlating lattice, spin, orbital, and charge; and for determining the structure and molecular chemistry of organic and soft matter. The instrument will be uniquely suited to answer fundamental questions in condensed matter physics that require understanding the physical and electronic structure at the atomic scale. Key developments include stable cryogenic capabilities that will allow access to emergent electronic phases, as well as hard/soft interfaces and radiation-sensitive materials.

B. Ultrafast Electron Diffraction and Microscopy Instrument: This instrument would be capable of nano-diffraction with 10 fs temporal resolution in stroboscopic mode, and better than 100 fs temporal resolution in single-shot mode. The instrument would also achieve single-shot real-space imaging with a spatial/temporal resolution of 10 nm/10 ps, representing a thousandfold improvement over current microscopes. Such a capability would be complementary to x-ray free electron lasers due to the difference in the nature of electron and x-ray scattering, enabling space-time mapping of lattice vibrations and energy transport, facilitating the understanding of molecular dynamics of chemical reactions, the photonic control of emergence in quantum materials, and the dynamics of mesoscopic materials.

C. Lab-In-Gap Dynamic Microscope: This instrument would enable quantitative measurements of materials structure, composition, and bonding evolution in technologically relevant environments, including liquids, gases and plasmas, thereby advancing the understanding of structure-function relationships at the atomic scale with up to nanosecond temporal resolution. This instrument would employ a versatile, modular sample stage and holder geometry to allow the multi-modal (e.g., optical, thermal, mechanical, electrical, and electrochemical) probing of materials’ functionality in situ and in operando. The electron optics encompasses a pole piece that can accommodate the new stage, differential pumping, detectors, aberration correctors, and other electron optical elements for measurement of materials dynamics.

To realize the proposed instruments in a timely fashion, BES should aggressively support research and development of complementary and enabling instruments, including new electron sources, advanced electron optics, new tunable specimen pumps and sample stages, and new detectors. The proposed instruments would have transformative impact on physics, chemistry, materials science, engineering

X-ray Optics for BES Light Source Facilities

Each new generation of synchrotron radiation sources has delivered an increase in average brightness 2 to 3 orders of magnitude over the previous generation. The next evolution toward diffraction-limited storage rings will deliver another 3 orders of magnitude increase. For ultrafast experiments, free electron lasers (FELs) deliver 10 orders of magnitude higher peak brightness than storage rings. Our ability to utilize these ultrabright sources, however, is limited by our ability to focus, monochromate, and manipulate these beams with X-ray optics. X-ray optics technology unfortunately lags behind source technology and limits our ability to maximally utilize even today’s X-ray sources. With ever more powerful X-ray sources on the horizon, a new generation of X-ray optics must be developed that will allow us to fully utilize these beams of unprecedented brightness.

The increasing brightness of X-ray sources will enable a new generation of measurements that could have revolutionary impact across a broad area of science, if optical systems necessary for transporting and analyzing X-rays can be perfected. The high coherent flux will facilitate new science utilizing techniques in imaging, dynamics, and ultrahigh-resolution spectroscopy. For example, zone-plate-based hard X-ray microscopes are presently used to look deeply into materials, but today’s resolution and contrast are restricted by limitations of the current lithography used to manufacture nanodiffractive optics. The large penetration length, combined in principle with very high spatial resolution, is an ideal probe of hierarchically ordered mesoscale materials, if zone-plate focusing systems can be improved. Resonant inelastic X-ray scattering (RIXS) probes a wide range of excitations in materials, from charge-transfer processes to the very soft excitations that cause the collective phenomena in correlated electronic systems. However, although RIXS can probe high-energy excitations, the most exciting and potentially revolutionary science involves soft excitations such as magnons and phonons; in general, these are well below the resolution that can be probed by today’s optical systems. The study of these low-energy excitations will only move forward if advances are made in high-resolution gratings for the soft X-ray energy region, and higher-resolution crystal analyzers for the hard X-ray region. In almost all the forefront areas of X-ray science today, the main limitation is our ability to focus, monochromate, and manipulate X-rays at the level required for these advanced measurements.

To address these issues, the U.S. Department of Energy (DOE) Office of Basic Energy Sciences (BES) sponsored a workshop, X-ray Optics for BES Light Source Facilities, which was held March 27–29, 2013, near Washington, D.C. The workshop addressed a wide range of technical and organizational issues. Eleven working groups were formed in advance of the meeting and sought over several months to define the most pressing problems and emerging opportunities and to propose the best routes forward for a focused R&D program to solve these problems. The workshop participants identified eight principal research directions (PRDs), as follows:

- Development of advanced grating lithography and manufacturing for high-energy resolution techniques such as soft X-ray inelastic scattering.

- Development of higher-precision mirrors for brightness preservation through the use of advanced metrology in manufacturing, improvements in manufacturing techniques, and in mechanical mounting and cooling.

- Development of higher-accuracy optical metrology that can be used in manufacturing, verification, and testing of optomechanical systems, as well as at-wavelength metrology that can be used for quantification of individual optics and alignment and testing of beamlines.

- Development of an integrated optical modeling and design framework that is designed and maintained specifically for X-ray optics.

- Development of nanolithographic techniques for improved spatial resolution and efficiency of zone plates.

- Development of large, perfect single crystals of materials other than silicon for use as beam splitters, seeding monochromators, and high-resolution analyzers.

- Development of improved thin-film deposition methods for fabrication of multilayer Laue lenses and high-spectral-resolution multilayer gratings.

- Development of supports, actuator technologies, algorithms, and controls to provide fully integrated and robust adaptive X-ray optic systems.

- Development of fabrication processes for refractive lenses in materials other than silicon.

The workshop participants also addressed two important nontechnical areas: our relationship with industry and organization of optics within the light source facilities. Optimization of activities within these two areas could have an important effect on the effectiveness and efficiency of our overall endeavor. These are crosscutting managerial issues that we identified as areas that needed further in-depth study, but they need to be coordinated above the individual facilities.

Finally, an issue that cuts across many of the optics improvements listed above is routine access to beamlines that ideally are fully dedicated to optics research and/or development. The success of the BES X-ray user facilities in serving a rapidly increasing user community has led to a squeezing of beam time for vital instrumentation activities. Dedicated development beamlines could be shared with other R&D activities, such as detector programs and novel instrument development.

In summary, to meet the challenges of providing the highest-quality X-ray beams for users and to fully utilize the high-brightness sources of today and those that are on the horizon, it will be critical to make strategic investments in X-ray optics R&D. This report can provide guidance and direction for effective use of investments in the field of X-ray optics and potential approaches to develop a better-coordinated program of X-ray optics development within the suite of BES synchrotron radiation facilities. Due to the importance and complexity of the field, the need for tight coordination between BES light source facilities and with industry, as well as the rapid evolution of light source capabilities, the workshop participants recommend holding similar workshops at least biannually.

Neutron and X-ray Detectors

The Basic Energy Sciences (BES) X-ray and neutron user facilities attract more than 12,000 researchers each year to perform cutting-edge science at these state-of-the-art sources. While impressive breakthroughs in X-ray and neutron sources give us the powerful illumination needed to peer into the nano- to mesoscale world, a stumbling block continues to be the distinct lag in detector development, which is slowing progress toward data collection and analysis. Urgently needed detector improvements would reveal chemical composition and bonding in 3-D and in real time, allow researchers to watch “movies” of essential life processes as they happen, and make much more efficient use of every X-ray and neutron produced by the source.

The immense scientific potential that will come from better detectors has triggered worldwide activity in this area. Europe in particular has made impressive strides, outpacing the United States on several fronts. Maintaining a vital U.S. leadership in this key research endeavor will require targeted investments in detector R&D and infrastructure.

To clarify the gap between detector development and source advances, and to identify opportunities to maximize the scientific impact of BES user facilities, a workshop on Neutron and X-ray Detectors was held August 1-3, 2012, in Gaithersburg, Maryland. Attendees from universities, national laboratories, and commercial organizations from the United States and around the globe took part in plenary sessions, breakout groups, and joint open-discussion summary sessions.

Sources have become immensely more powerful and are now brighter (more particles focused onto the sample per second) and more precise (higher spatial, spectral, and temporal resolution). To fully utilize these source advances, detectors must become faster, more efficient, and more discriminating. In supporting the mission of today’s cutting-edge neutron and X-ray sources, the workshop identified six detector research challenges (and two computing hurdles that result from the corresponding increase in data volume) for the detector community to overcome in order to realize the full potential of BES neutron and X-ray facilities.

Resolving these detector impediments will improve scientific productivity both by enabling new types of experiments, which will expand the scientific breadth at the X-ray and neutron facilities, and by potentially reducing the beam time required for a given experiment. These research priorities are summarized in the table below. Note that multiple, simultaneous detector improvements are often required to take full advantage of brighter sources.

High-efficiency hard X-ray sensors: The fraction of incident particles that are actually detected defines detector efficiency. Silicon, the most common direct-detection X-ray sensor material, is (for typical sensor thicknesses) 100% efficient at 8 keV, 25% efficient at 20 keV, and only 3% efficient at 50 keV. Other materials are needed for hard X-rays.

Replacement for ³He for neutron detectors: ³He has long been the neutron detection medium of choice because of its high cross section over a wide neutron energy range for the reaction ³He + n → ³H + ¹H + 0.764 MeV. ³He stockpiles are rapidly dwindling, and what is available can be had only at prohibitively high prices. Doped scintillators hold promise as ways to capture neutrons and convert them into light, although work is needed on brighter, more efficient scintillator solutions. Neutron detectors also require advances in speed and resolution.

Fast-framing X-ray detectors: Today’s brighter X-ray sources make time-resolved studies possible. For example, hybrid X-ray pixel detectors, initially developed for particle physics, are becoming fairly mature X-ray detectors, with considerable development in Europe. To truly enable time-resolved studies, higher frame rates and dynamic range are required, and smaller pixel sizes are desirable.

High-speed spectroscopic X-ray detectors: Improvements in the readout speed and energy resolution of X-ray detectors are essential to enable chemically sensitive microscopies. Advances would make it possible to take images with simultaneous spatial and chemical information.

Very high-energy-resolution X-ray detectors: The energy resolution of semiconductor detectors, while suitable for a wide range of applications, is far less than what can be achieved with X-ray optics. A direct detector that could rival the energy resolution of optics could dramatically improve the efficiency of a multitude of experiments, as experiments are often repeated at a number of different energies. Very high-energy-resolution detectors could make these experiments parallel, rather than serial.

Low-background, high-spatial-resolution neutron detectors: Low-background detectors would significantly improve experiments that probe excitations (phonons, spin excitations, rotation, and diffusion in polymers and molecular substances, etc.) in condensed matter. Improved spatial resolution would greatly benefit radiography, tomography, phase-contrast imaging, and holography.

Improved acquisition and visualization tools: In the past, with the limited variety of slow detectors, it was straightforward to visualize data as it was being acquired (and adjust experimental conditions accordingly) to create a compact data set that the user could easily transport. As detector complexity and data rates explode, this becomes much more challenging. The following goals were identified as important for coping with the growing data volume from high-speed detectors:

- Facilitate better algorithm development, in particular algorithms that can minimize the quantity of data stored.

- Improve community-driven mechanisms to reduce data protocols and enhance quantitative, interactive visualization tools.

- Develop and distribute community-developed, detector-specific simulation tools.

- Aim for parallelization to take advantage of high-performance analysis platforms.
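The silicon efficiency figures quoted under "High-efficiency hard X-ray sensors" can be roughly reproduced with the Beer-Lambert absorption law, efficiency = 1 − exp(−t/λ), where t is the sensor thickness and λ the attenuation length at the photon energy of interest. The sketch below is illustrative only: the 300 µm thickness and the rounded attenuation lengths are assumptions chosen for this example, not values taken from the workshop report, and the function names are hypothetical.

```python
import math


def absorption_efficiency(thickness_um: float, attenuation_length_um: float) -> float:
    """Fraction of normally incident photons absorbed in a sensor layer.

    Uses the Beer-Lambert law: 1 - exp(-thickness / attenuation_length).
    """
    return 1.0 - math.exp(-thickness_um / attenuation_length_um)


# ASSUMPTION: a "typical" sensor thickness of 300 micrometres.
SENSOR_THICKNESS_UM = 300.0

# ASSUMPTION: rounded, approximate attenuation lengths for silicon (micrometres),
# keyed by photon energy in keV. Illustrative values only.
SILICON_ATTENUATION_LENGTH_UM = {8: 70.0, 20: 1000.0, 50: 10000.0}


def silicon_efficiencies(thickness_um: float = SENSOR_THICKNESS_UM) -> dict:
    """Absorption efficiency of a silicon layer at each tabulated energy."""
    return {
        energy_kev: absorption_efficiency(thickness_um, length_um)
        for energy_kev, length_um in SILICON_ATTENUATION_LENGTH_UM.items()
    }


if __name__ == "__main__":
    for energy_kev, efficiency in silicon_efficiencies().items():
        print(f"{energy_kev:>3} keV: {efficiency:.0%}")
```

Under these assumptions the computed efficiencies fall close to the quoted figures (near-complete absorption at 8 keV, roughly a quarter at 20 keV, and a few percent at 50 keV), which illustrates why thicker or higher-Z sensor materials are needed for hard X-rays.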

The diversity of detector improvements required is necessarily as broad as the range of scientific experimentation at BES facilities. This workshop identified a variety of avenues by which detector R&D can enable enhanced science at BES facilities. The Research Directions listed above will be addressed by focused R&D and detector engineering, both of which require specialized infrastructure and skills. While the United States lags behind other countries in several areas of neutron and X-ray detector development, significant talent exists across the complex. A forum of technical experts, facilities management, and BES could be a venue to provide further definition.

From Quanta to the Continuum: Opportunities for Mesoscale Science

We are at a time of unprecedented challenge and opportunity. Our economy is in need of a jump start, and our supply of clean energy needs to dramatically increase. Innovation through basic research is a key means for addressing both of these challenges. The great scientific advances of the last decade and more, especially at the nanoscale, are ripe for exploitation. Seizing this key opportunity requires mastering the mesoscale, where classical, quantum, and nanoscale science meet. It has become clear that—in many important areas—the functionality that is critical to macroscopic behavior begins to manifest itself not at the atomic or nanoscale but at the mesoscale, where defects, interfaces, and non-equilibrium structures are the norm. With our recently acquired knowledge of the rules of nature that govern the atomic and nanoscales, we are well positioned to unravel and control the complexity that determines functionality at the mesoscale. The reward for breakthroughs in our understanding at the mesoscale is the emergence of previously unrealized functionality. The present report explores the opportunity and defines the research agenda for mesoscale science—discovering, understanding, and controlling interactions among disparate systems and phenomena to reach the full potential of materials complexity and functionality. The ability to predict and control mesoscale phenomena and architectures is essential if atomic and molecular knowledge is to blossom into a next generation of technology opportunities, societal benefits, and scientific advances.

Mesoscale science and technology opportunities build on the enormous foundation of nanoscience that the scientific community has created over the last decade and continues to create. New features arise naturally in the transition to the mesoscale, including the emergence of collective behavior; the interaction of disparate electronic, mechanical, magnetic, and chemical phenomena; the appearance of defects, interfaces and statistical variation; and the self-assembly of functional composite systems. The mesoscale represents a discovery laboratory for finding new science, a self-assembly foundry for creating new functional systems, and a design engine for new technologies.

The last half-century and especially the last decade have witnessed a remarkable drive to ever smaller scales, exposing the atomic, molecular, and nanoscale structures that anchor the macroscopic materials and phenomena we deal with every day. Given this knowledge and capability, we are now starting the climb up from the atomic and nanoscale to the greater complexity and wider horizons of the mesoscale. The constructionist path up from atomic and nanoscale to mesoscale holds a different kind of promise than the reductionist path down: it allows us to re-arrange the nanoscale building blocks into new combinations, exploit the dynamics and kinetics of these new coupled interactions, and create qualitatively different mesoscale architectures and phenomena leading to new functionality and ultimately new technology. The reductionist journey to smaller length and time scales gave us sophisticated observational tools and intellectual understanding that we can now apply with great advantage to the wide opportunity of mesoscale science following a bottom-up approach.

Realizing the mesoscale opportunity requires advances not only in our knowledge but also in our ability to observe, characterize, simulate, and ultimately control matter. Mastering mesoscale materials and phenomena requires the seamless integration of theory, modeling, and simulation with synthesis and characterization. The inherent complexity of mesoscale phenomena, often including many nanoscale structural or functional units, requires theory and simulation spanning multiple space and time scales. In mesoscale architectures the positions of individual atoms are often no longer relevant, requiring new simulation approaches beyond density functional theory and molecular dynamics that are so successful at atomic scales. New organizing principles that describe emergent mesoscale phenomena arising from many coupled and competing degrees of freedom wait to be discovered and applied. Measurements that are dynamic, in situ, and multimodal are needed to capture the sequential phenomena of composite mesoscale materials. Finally, the ability to design and realize the complex materials we imagine will require qualitative advances in how we synthesize and fabricate materials and how we manage their metastability and degradation over time. We must move from serendipitous to directed discovery, and we must master the art of assembling structural and functional nanoscale units into larger architectures that create a higher level of complex functional systems.

While the challenge of discovering, controlling, and manipulating complex mesoscale architectures and phenomena to realize new functionality is immense, success in the pursuit of these research directions will have outcomes with the potential to transform society. The body of this report outlines the need, the opportunities, the challenges, and the benefits of mastering mesoscale science.

Data and Communications in Basic Energy Sciences: Creating a Pathway for Scientific Discovery

This report is based on the Department of Energy (DOE) Workshop on “Data and Communications in Basic Energy Sciences: Creating a Pathway for Scientific Discovery” that was held at the Bethesda Marriott in Maryland on October 24-25, 2011. The workshop brought together leading researchers from the Basic Energy Sciences (BES) facilities and Advanced Scientific Computing Research (ASCR). The workshop was co-sponsored by these two Offices to identify opportunities and needs for data analysis, ownership, storage, mining, provenance and data transfer at light sources, neutron sources, microscopy centers and other facilities.

The participants' charge was to identify current and anticipated issues in the acquisition, analysis, communication, and storage of experimental data that could impact the progress of scientific discovery; to ascertain what knowledge, methods, and tools are needed to mitigate present and projected shortcomings; and to create the foundation for information exchanges and collaboration between ASCR- and BES-supported researchers and facilities.

The workshop was organized in the context of the impending data tsunami that will be produced by DOE’s BES facilities. Current facilities, like SLAC National Accelerator Laboratory’s Linac Coherent Light Source, can produce up to 18 terabytes (TB) per day, while upgraded detectors at Lawrence Berkeley National Laboratory’s Advanced Light Source will generate ~10 TB per hour. The expectation is that these rates will increase by over an order of magnitude in the coming decade. The urgency to develop new strategies and methods in order to stay ahead of this deluge and extract the most science from these facilities was recognized by all. The four focus areas addressed in this workshop were:

- Workflow Management - Experiment to Science: Identifying and managing the data path from experiment to publication.

- Theory and Algorithms: Recognizing the need for new tools for computation at scale, supporting large data sets and realistic theoretical models.

- Visualization and Analysis: Supporting near-real-time feedback for experiment optimization and new ways to extract and communicate critical information from large data sets.

- Data Processing and Management: Outlining needs in computational and communication approaches and infrastructure needed to handle unprecedented data volume and information content.
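As a rough illustration of the data rates quoted above, the following Python sketch converts them into sustained network bandwidth. It assumes decimal terabytes (10^12 bytes) and continuous, around-the-clock acquisition, neither of which is stated in the report; the function name is hypothetical.

```python
# Assumption: 1 TB = 10**12 bytes (decimal terabyte), not stated in the report.
BYTES_PER_TB = 10**12
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 24 * SECONDS_PER_HOUR


def sustained_rate_gbit_per_s(terabytes: float, seconds: float) -> float:
    """Average bandwidth in Gbit/s needed to move `terabytes` in `seconds`."""
    bits = terabytes * BYTES_PER_TB * 8
    return bits / seconds / 1e9


# Figures quoted in the abstract.
lcls_rate = sustained_rate_gbit_per_s(18, SECONDS_PER_DAY)   # 18 TB per day
als_rate = sustained_rate_gbit_per_s(10, SECONDS_PER_HOUR)   # ~10 TB per hour

# "Over an order of magnitude" growth, taken here as exactly 10x (assumption).
als_rate_future = 10 * als_rate

if __name__ == "__main__":
    print(f"LCLS, 18 TB/day:      {lcls_rate:.2f} Gbit/s")
    print(f"ALS, 10 TB/hour:      {als_rate:.2f} Gbit/s")
    print(f"ALS after 10x growth: {als_rate_future:.1f} Gbit/s")
```

Under these assumptions the upgraded detectors correspond to roughly 22 Gbit/s of sustained throughput, more than ten times the rate implied by 18 TB per day, which gives a sense of why data transfer and storage were treated as urgent.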

It should be noted that almost all participants recognized that there were unlikely to be any turn-key solutions available due to the unique, diverse nature of the BES community, where research at adjacent beamlines at a given light source facility often spans everything from biology to materials science to chemistry using scattering, imaging and/or spectroscopy. However, it was also noted that advances supported by other programs in data research, methodologies, and tool development could be implemented on reasonable time scales with modest effort. Adapting available standard file formats, robust workflows, and in-situ analysis tools for user facility needs could pay long-term dividends.

Workshop participants assessed current requirements as well as future challenges and made the following recommendations in order to achieve the ultimate goal of enabling transformative science in current and future BES facilities:

- Theory and analysis components should be integrated seamlessly within experimental workflow: Develop new algorithms for data analysis based on common data formats and toolsets.

- Move analysis closer to experiment: Move the analysis closer to the experiment to enable real-time (in-situ) streaming capabilities, live visualization of the experiment, and an increase of the overall experimental efficiency.

- Match data management access and capabilities with advancements in detectors and sources: Remove bottlenecks, provide interoperability across different facilities/beamlines, and apply forefront mathematical techniques to more efficiently extract science from the experiments.

This workshop report examines and reviews the status of several BES facilities and highlights the successes and shortcomings of the current data and communication pathways for scientific discovery. It then ascertains what methods and tools are needed to mitigate present and projected data bottlenecks to science over the next 10 years. The goal of this report is to create the foundation for information exchanges and collaborations among ASCR and BES supported researchers, the BES scientific user facilities, and ASCR computing and networking facilities.

To jumpstart these activities, there was a strong desire to see a joint effort between ASCR and BES along the lines of the highly successful Scientific Discovery through Advanced Computing (SciDAC) program in which integrated teams of engineers, scientists and computer scientists were engaged to tackle a complete end-to-end workflow solution at one or more beamlines, to ascertain what challenges will need to be addressed in order to handle future increases in data

Research Needs and Impacts in Predictive Simulation for Internal Combustion Engines (PreSICE)

This report is based on an SC/EERE Workshop to Identify Research Needs and Impacts in Predictive Simulation for Internal Combustion Engines (PreSICE), held March 3, 2011, to determine strategic focus areas that will accelerate innovation in engine design to meet national goals in transportation efficiency.

The U.S. has reached a pivotal moment when pressures of energy security, climate change, and economic competitiveness converge. Oil prices remain volatile and have exceeded $100 per barrel twice in five years. At these prices, the U.S. spends $1 billion per day on imported oil to meet our energy demands. Because the transportation sector accounts for two-thirds of our petroleum use, energy security is deeply entangled with our transportation needs. At the same time, transportation produces one-quarter of the nation’s carbon dioxide output. Increasing the efficiency of internal combustion engines is a technologically proven and cost-effective approach to dramatically improving the fuel economy of the nation’s fleet of vehicles in the near- to mid-term, with the corresponding benefits of reducing our dependence on foreign oil and reducing carbon emissions. Because of their relatively low cost, high performance, and ability to utilize renewable fuels, internal combustion engines—including those in hybrid vehicles—will continue to be critical to our transportation infrastructure for decades. Achievable advances in engine technology can improve the fuel economy of automobiles by over 50% and trucks by over 30%. Achieving these goals will require the transportation sector to compress its product development cycle for cleaner, more efficient engine technologies by 50% while simultaneously exploring innovative design space. Concurrently, fuels will also be evolving, adding another layer of complexity and further highlighting the need for efficient product development cycles. Current design processes, using “build and test” prototype engineering, will not suffice. Current market penetration of new engine technologies is simply too slow—it must be dramatically accelerated.

These challenges present a unique opportunity to marshal U.S. leadership in science-based simulation to develop predictive computational design tools for use by the transportation industry. The use of predictive simulation tools for enhancing combustion engine performance will shrink engine development timescales, accelerate time to market, and reduce development costs, while ensuring the timely achievement of energy security and emissions targets and enhancing U.S. industrial competitiveness.

In 2007, Cummins achieved a milestone in engine design by bringing a diesel engine to market solely with computer modeling and analysis tools. The only testing was after the fact to confirm performance. Cummins achieved a reduction in development time and cost. Equally important, the company realized a more robust design, improved fuel economy, and met all environmental and customer constraints. This important first step demonstrates the potential for computational engine design. But the daunting complexity of engine combustion and the revolutionary increases in efficiency needed require the development of simulation codes and computation platforms far more advanced than those available today.

Based on these needs, a Workshop to Identify Research Needs and Impacts in Predictive Simulation for Internal Combustion Engines (PreSICE) convened over 60 U.S. leaders in the engine combustion field from industry, academia, and national laboratories to focus on two critical areas of advanced simulation, as identified by the U.S. automotive and engine industries. First, modern engines require precise control of the injection of a broad variety of fuels that is far more subtle than achievable to date and that can be obtained only through predictive modeling and simulation. Second, the simulation, understanding, and control of stochastic in-cylinder combustion processes lie on the critical path to realizing more efficient engines with greater power density. Fuel sprays set the initial conditions for combustion in essentially all future transportation engines; yet today designers primarily use empirical methods that limit the efficiency achievable. Three primary spray topics were identified as focus areas in the workshop:

- The fuel delivery system, which includes fuel manifolds and internal injector flow,

- The multi-phase fuel–air mixing in the combustion chamber of the engine, and

- The heat transfer and fluid interactions with cylinder walls.

Current understanding and modeling capability of stochastic processes in engines remains limited and prevents designers from achieving significantly higher fuel economy. To improve this situation, the workshop participants identified three focus areas for stochastic processes:

- Improve fundamental understanding that will help to establish and characterize the physical causes of stochastic events,

- Develop physics-based simulation models that are accurate and sensitive enough to capture performance-limiting variability, and

- Quantify and manage uncertainty in model parameters and boundary conditions.

Improved models and understanding in these areas will allow designers to develop engines that have reduced design margins and operate reliably in more efficient regimes. All of these areas require improved basic understanding, high-fidelity model development, and rigorous model validation. These advances will greatly reduce the uncertainties in current models and improve understanding of sprays and fuel–air mixture preparation that limit the investigation and development of advanced combustion technologies.

The two strategic focus areas have distinctive characteristics but are inherently coupled. Coordinated activities in basic experiments, fundamental simulations, and engineering-level model development and validation can be used to successfully address all of the topics identified in the PreSICE workshop. The outcome will be:

- New and deeper understanding of the relevant fundamental physical and chemical processes in advanced combustion technologies,

- Implementation of this understanding into models and simulation tools appropriate for both exploration and design, and

- Sufficient validation with uncertainty quantification to provide confidence in the simulation results.

These outcomes will provide the design tools for industry to reduce development time by up to 30% and improve engine efficiencies by 30% to 50%. The improved efficiencies applied to the national mix of transportation applications have the potential to save over 5 million barrels of oil per day, a current cost savings of $500 million per day.
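The savings figure above can be checked with simple arithmetic. The sketch below, in Python, derives the oil price implied by the two quoted numbers and compares it with the $100 per barrel mentioned earlier in this abstract. The function names are illustrative and not from the report, and the import volume is an inference from the quoted figures rather than a number the report states.

```python
def implied_price_per_barrel(savings_usd_per_day: float,
                             barrels_per_day: float) -> float:
    """Oil price (USD per barrel) implied by a daily saving and a daily volume."""
    return savings_usd_per_day / barrels_per_day


def implied_barrels_per_day(spend_usd_per_day: float,
                            price_usd_per_barrel: float) -> float:
    """Daily volume implied by a daily spend at a given price."""
    return spend_usd_per_day / price_usd_per_barrel


# Figures quoted in the abstract.
SAVED_BARRELS_PER_DAY = 5e6        # "over 5 million barrels of oil per day"
SAVINGS_USD_PER_DAY = 500e6        # "$500 million per day"
IMPORT_SPEND_USD_PER_DAY = 1e9     # "$1 billion per day on imported oil"

price = implied_price_per_barrel(SAVINGS_USD_PER_DAY, SAVED_BARRELS_PER_DAY)

# Inferred, not stated in the report: import volume at the implied price.
imports = implied_barrels_per_day(IMPORT_SPEND_USD_PER_DAY, price)

if __name__ == "__main__":
    print(f"Implied oil price: ${price:.0f} per barrel")
    print(f"Implied imports:   {imports / 1e6:.0f} million barrels per day")
    print(f"Savings as a share of implied imports: "
          f"{SAVED_BARRELS_PER_DAY / imports:.0%}")
```

The two quoted figures are consistent with the $100 per barrel price cited earlier; at that price, the projected savings would amount to about half of the import volume implied by the $1 billion per day figure.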

Report of the Basic Energy Sciences Workshop on Compact Light Sources