Scientists Compute Death Throes of White Dwarf Star in 3D Simulations Using DOE Supercomputers

- Two ASCR-Funded Research Projects Win Prestigious R&D 100 Awards

- 2007 Fernbach Award - David Keyes

- ASCR Researchers Win Emerald Honors Award

- Scientists Compute Death Throes of White Dwarf Star in 3D Simulations Using Department of Energy Supercomputers

White dwarf stars pack one and a half times the mass of the sun into an object the size of Earth. When they burn out, the ensuing explosion produces a type of supernova that astrophysicists believe manufactures most of the iron in the universe. These Type Ia supernovas, as they are called, may also help illuminate the mystery of dark energy, an unknown force that dominates the universe.

“That will only be possible if we can gain a much better understanding of the way in which these stars explode,” said Don Lamb, Director of the University of Chicago’s Center for Astrophysical Thermonuclear Flashes.

Scientists have tried for years to blow up a white dwarf star by writing the laws of physics into computer software and then testing it in detailed computational simulations. One of the key questions facing astrophysicists is what actually triggers the supernova explosion. At first, detonations occurred in the simulations only if they were inserted manually into the programs. The Flash team later detonated white dwarf stars naturally in simplified, two-dimensional tests, but “there were claims made that it wouldn’t work in 3D,” Lamb said.

Nevertheless, in January, the Flash Center team for the first time naturally detonated a white dwarf in a more realistic three-dimensional simulation using supercomputers operated by the U.S. Department of Energy (DOE). The simulation confirmed what the team already suspected from previous tests: that the stars detonate in a supersonic process resembling diesel-engine combustion.

Unlike a gasoline engine, in which a spark ignites the fuel, compression triggers ignition in a diesel engine. “You don’t want supersonic burning in a car engine, but the triggering is similar,” said Dean Townsley, a Research Associate at the Joint Institute for Nuclear Astrophysics at Chicago.

The temperatures attained by a detonating white dwarf star make the 10,000-degree surface of the sun seem like a cold winter day in Chicago by comparison. “In nuclear explosions, you deal with temperatures on the order of a billion degrees,” said Flash Center Research Associate Cal Jordan.

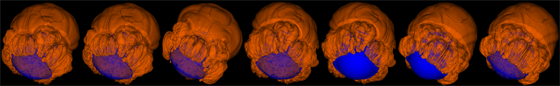

The new 3D white dwarf simulation shows the formation of a flame bubble near the center of the star. The bubble, initially measuring approximately 10 miles in diameter, rises more than 1,200 miles to the surface of the star in one second. In another second, the flame crashes into itself on the opposite end of the star, triggering a detonation. “It seems that the dynamics of the collision is what creates a localized compression region where the detonation will manifest,” Townsley said.
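The timeline above implies a striking average speed for the rising bubble. A quick Python check, taking the quoted 1,200 miles and one second at face value (the text says “more than 1,200 miles,” so the result is a lower bound, and it is an average over the whole rise, not a peak speed):

```python
# Average rise speed of the flame bubble, from the figures quoted above.
# The source says "more than 1,200 miles", so this is a lower bound.
KM_PER_MILE = 1.609344            # exact definition of the international mile
SPEED_OF_LIGHT_KM_S = 299_792.458

rise_distance_miles = 1200
rise_time_s = 1.0

avg_speed_km_s = rise_distance_miles * KM_PER_MILE / rise_time_s
fraction_of_c = avg_speed_km_s / SPEED_OF_LIGHT_KM_S

print(f"{avg_speed_km_s:,.0f} km/s")            # 1,931 km/s
print(f"{fraction_of_c:.2%} of light speed")    # 0.64% of light speed
```
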

This process plays out in no more than three seconds, but the simulations take considerably longer. The Flash Center team ran its massive simulation on two powerful supercomputers at DOE’s Lawrence Livermore and Lawrence Berkeley national laboratories in California. Just one of the jobs ran for 75 hours on 768 computer processors, for a total of about 58,000 processor-hours.
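The total for that single job is simply wall-clock hours multiplied by the number of processors. A minimal Python check using only the figures quoted above; the conversion to single-processor years is an illustrative addition that assumes perfect parallel efficiency:

```python
# Processor-hours consumed by the single job described above:
# wall-clock hours multiplied by the number of processors used.
wall_clock_hours = 75
processors = 768

processor_hours = wall_clock_hours * processors
# Same work on one processor, assuming perfect parallel efficiency.
single_cpu_years = processor_hours / (24 * 365)

print(f"{processor_hours:,} processor-hours")        # 57,600 processor-hours
print(f"~{single_cpu_years:.1f} years on one CPU")   # ~6.6 years on one CPU
```

The exact product, 57,600, rounds to the roughly 58,000 hours given in the text.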

“I cannot say enough about the support we received from the high-performance computing teams at Lawrence Livermore and Lawrence Berkeley national laboratories,” Lamb said. “Without their help, we would never have been able to do the simulations.”

Support for the simulations to be presented at Santa Barbara was provided by two separate DOE programs: the Advanced Simulation and Computing program, which has provided funding and computer time to the Flash Center for nearly a decade, and the Office of Science’s INCITE (Innovative and Novel Computational Impact on Theory and Experiment) program, which has provided computer time.

The simulations are so demanding (the Flash team calls it “extreme computing”) that they monopolize DOE’s powerful computers during the allocated time.

Nevertheless, the scientific payoff for logging these long, stressful hours is potentially huge. Astrophysicists value Type Ia supernovas because they all seem to explode with approximately the same intensity. Comparing that known intrinsic brightness with how bright each explosion appears from Earth gives its distance, and these distance measurements reveal how fast the universe has been expanding at various times during its long history.

In the late 1990s, supernova measurements revealed that the expansion of the universe is accelerating. Not knowing what force was working against gravity to drive this acceleration, scientists began calling it “dark energy.” The Flash Center simulations may help astrophysicists calibrate these measurements better by accounting for the minor variation that they believe occurs from one supernova to the next.
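The calibration described above rests on the standard astronomical distance modulus, m − M = 5 log10(d / 10 pc), where m is the apparent and M the absolute (intrinsic) magnitude. The Python sketch below is illustrative only: the peak absolute magnitude of −19.3 is an assumed typical value for Type Ia supernovas, the function name `distance_mpc` is hypothetical, and the formula ignores dust extinction and cosmological corrections. It also shows why the small supernova-to-supernova variation matters: a 0.1-magnitude error in the assumed brightness shifts the inferred distance by several percent.

```python
# Assumption: typical peak absolute magnitude of a Type Ia supernova.
M_PEAK = -19.3


def distance_mpc(apparent_mag: float, absolute_mag: float = M_PEAK) -> float:
    """Distance in megaparsecs from the distance modulus m - M = 5*log10(d / 10 pc).

    Ignores dust extinction, K-corrections and other cosmological subtleties.
    """
    d_parsecs = 10 ** ((apparent_mag - absolute_mag + 5) / 5)
    return d_parsecs / 1e6


# A hypothetical supernova observed at apparent magnitude 15.7.
d = distance_mpc(15.7)
print(f"{d:.1f} Mpc")  # 100.0 Mpc

# Misjudging the intrinsic brightness by 0.1 mag scales the distance by 10**(0.1/5).
distance_error = 10 ** (0.1 / 5) - 1
print(f"{distance_error:.1%}")  # 4.7%
```

Because distance depends exponentially on magnitude, even modest unmodeled differences between explosions translate into noticeable distance errors, which is why understanding that variation matters for dark-energy measurements.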

“To make extremely precise statements about the nature of dark energy and cosmological expansion, you have to be able to understand the nature of that variation,” Fisher said.

Telescopic images of the two supernovas closest to Earth seem to match the Flash team’s findings. The images of both supernovas show a sphere with a cap blown off one end.

“In our model, we have a rising bubble that pops out of the top. It’s very suggestive,” Jordan said.

Contact: Steve Koppes (skoppes@uchicago.edu), 773-702-8366

Phani Nukala has been a research staff member in Computational Materials Sciences at Oak Ridge National Laboratory since 2001; he received his Ph.D. in Civil Engineering from Purdue University. His research interests include computational mechanics and materials science, statistical physics, multi-scale methods, bio-inspired materials, and high-performance scientific computing. A proposal he led, "Large Scale Simulations of Fracture in Disordered Media: Statistical Physics of Fracture," was selected to receive a 2007 Department of Energy Innovative and Novel Computational Impact on Theory and Experiment (INCITE) award.

Dean Williams, principal investigator for the SciDAC project "Scaling the Earth System Grid to Petascale Data Center for Enabling Technologies," has been the lead computer scientist for the Program for Climate Model Diagnosis and Intercomparison (PCMDI) at Lawrence Livermore National Laboratory for the last 18 years, designing and developing data analysis and visualization tools. He is also the Deputy Division Leader of the Biology, Atmosphere, Chemistry and Earth (BACE) Division at LLNL. Williams' career has focused on unifying the climate change research community by providing the tools, data, and computing resources that enable scientific discovery.

David Keyes, an applied mathematician with a long history of involvement in ASCR research, was named the recipient of the 2007 Sidney Fernbach Award. The Fernbach Award was established in 1992 in memory of Sidney Fernbach, a pioneer in the development and application of high-performance computers, and is given by the IEEE Computer Society for innovative uses of high-performance computing in problem solving. Keyes was designated the 2007 recipient in recognition of his outstanding contributions to the development of scalable numerical algorithms for the solution of nonlinear partial differential equations, as well as his exceptional leadership in high-performance computation. The award was presented at SC07, the international conference for high-performance computing, networking, storage and analysis, held November 10-16, 2007, in Reno, NV. Keyes gave a plenary lecture at the conference as part of a special awards session.

Two ASCR-Funded Research Projects Win Prestigious R&D 100 Awards

Two ASCR-funded projects received R&D Magazine's R&D 100 Awards, which have been called the “Oscars of invention.” The first, from Argonne National Laboratory, was Access Grid 3.0, a collaborative environment in which users at many locations can see and hear each other as if they were all in the same room. It is more than just videoconferencing: it enables participants to share and interact with files and applications. The open nature of the Access Grid software has attracted thousands of users from around the world, encouraging numerous commercial and research institutions to extend the software for their own purposes.

The other award-winning research project, from Lawrence Livermore National Laboratory, was Hypre (“High-performance preconditioners”). Led by Rob Falgout, Hypre is a software library unique in its ability to provide solution algorithms that are effective on a wide variety of problems, are easily accessible through multiple user interfaces, and effectively exploit the full computational power of today’s high-performance computers. Hypre provides linear solver algorithms developed specifically to scale to large numbers of processors. Simulations that previously took days can now be run in hours or less.
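To give a rough sense of what a “preconditioner” does, the sketch below solves a linear system with the conjugate-gradient method, preconditioned by the simplest possible choice: the matrix diagonal (Jacobi). This is a generic textbook illustration, not Hypre’s API or algorithms. Hypre’s own preconditioners, such as algebraic multigrid, are far more sophisticated and run in parallel across many processors. The function name `pcg_jacobi` and the test matrix are hypothetical.

```python
import numpy as np


def pcg_jacobi(A, b, tol=1e-10, max_iter=1000):
    """Conjugate gradient with a Jacobi (diagonal) preconditioner.

    A must be symmetric positive definite. Returns (x, iterations).
    """
    x = np.zeros_like(b, dtype=float)
    r = b - A @ x                      # initial residual
    inv_diag = 1.0 / np.diag(A)        # preconditioner M^{-1} = diag(A)^{-1}
    z = inv_diag * r                   # preconditioned residual
    p = z.copy()                       # first search direction
    rz = r @ z
    for k in range(max_iter):
        Ap = A @ p
        alpha = rz / (p @ Ap)          # step length along p
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) < tol * np.linalg.norm(b):
            return x, k + 1
        z = inv_diag * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p      # next A-conjugate direction
        rz = rz_new
    return x, max_iter


# Hypothetical test problem: a tridiagonal, diagonally dominant SPD matrix
# whose diagonal varies widely, which is where diagonal scaling helps.
n = 100
A = np.diag(np.linspace(2.0, 200.0, n)) - np.eye(n, k=1) - np.eye(n, k=-1)
b = np.ones(n)

x, iters = pcg_jacobi(A, b)
print(iters, np.linalg.norm(A @ x - b))
```

Production libraries replace the diagonal with preconditioners that capture much more of the matrix structure, which is what keeps iteration counts low as problems grow to very large processor counts.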