40 Years of Research Milestones

2012 - Finding the Higgs Boson, the Last Piece of the Standard Model of Physics

The Standard Model of physics, which explains how particles interact, was missing a major piece until quite recently. Scientists' difficulty finding it was throwing the entire model into question. In these landmark 2012 papers, scientists described detecting the missing piece, called the Higgs boson. While previous experiments set limits on where scientists should look, these findings from the ATLAS and CMS experiments at the Large Hadron Collider were the first to identify a specific particle that matched all of the necessary characteristics. These experimental observations led to the 2013 Nobel Prize in Physics. In addition to completing the Standard Model, the presence of the Higgs boson also explains how the building blocks of our universe have mass.

G. Aad, et al. (ATLAS Collaboration), "Observation of a new particle in the search for the Standard Model Higgs Boson with the ATLAS Detector at the LHC." Physics Letters B 716, 1-29 (2012). [DOI: 10.1016/j.physletb.2012.08.020]

S. Chatrchyan, et al. (CMS Collaboration), "Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC." Physics Letters B 716, 30-61 (2012). [DOI: 10.1016/j.physletb.2012.08.021] (Image credit: Lawrence Livermore National Laboratory)

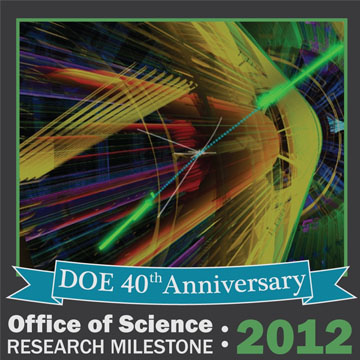

2011 - Setting the Cell in Motion

Getting to the atomic order of incredibly complex structures buried in the fatty membranes of cells isn't easy. In fact, the search for the structure of the G-protein-coupled receptors, which detect chemicals such as adrenaline, has consumed the better part of two decades. This landmark 2011 paper described, for the first time, the atomic structure of a G-protein-coupled receptor at work. This discovery was made possible by DOE's Advanced Photon Source. In this case, the team determined the structure of the receptor in the act of turning on a G-protein inside a cell. The receptor activates a G-protein based on the chemical it detects. That is, it conveys different messages to different cells based on what it detects. The receptors are involved in numerous responses to hormones and neurotransmitters throughout the body. Therefore, the receptors are a popular target for drugs. Research into the receptors earned Brian Kobilka as well as Robert Lefkowitz the 2012 Nobel Prize in Chemistry.

S.G.F. Rasmussen, B.T. DeVree, Y. Zou, A.C. Kruse, K.Y. Chung, T.S. Kobilka, F.S. Thian, P.S. Chae, E. Pardon, D. Calinski, J.M. Mathiesen, S.T.A. Shah, J.A. Lyons, M. Caffrey, S.H. Gellman, J. Steyaert, G. Skiniotis, W.I. Weis, R.K. Sunahara, and B.K. Kobilka, "Crystal structure of the β2 adrenergic receptor–Gs protein complex." Nature 477, 549-555 (2011). [DOI: 10.1038/nature10361]. Subscription required: contact your local librarian for access. (Image credit: Adapted by permission from Macmillan Publishers Ltd: Nature (see citation), ©2011)

2011 - Creating an Electricity Sponge

Supercapacitors can store electrical energy quickly, release it superfast and last for thousands of recharges. Even with all of these advantages, supercapacitors aren't widely used. Why? They simply don't have as much storage capacity as batteries. This landmark 2011 paper offered a near atomic-resolution view of a new material that let a supercapacitor store more energy – with an energy density nearly equal to a lead-acid battery. This material – called activated graphene – had more surface area than other forms of graphene. The surface area was the result of the formation of a network of tiny pores, some just a nanometer wide. The pore walls were made of carbon just one atom thick. In some ways, the structure resembled inside-out buckyballs. The incredibly detailed view of the activated graphene material was created using microscopes and other instruments at three DOE scientific user facilities.

Y. Zhu, S. Murali, M.D. Stoller, K.J. Ganesh, W. Cai, P.J. Ferreira, A. Pirkle, R.M. Wallace, K.A. Cychosz, M. Thommes, D. Su, E.A. Stach, and R.S. Ruoff, "Carbon-based supercapacitors produced by activation of graphene." Science 332(6037), 1537-1541 (2011). [DOI: 10.1126/science.1200770]. Subscription required: contact your local librarian for access. (Photo credit: Brookhaven National Laboratory)

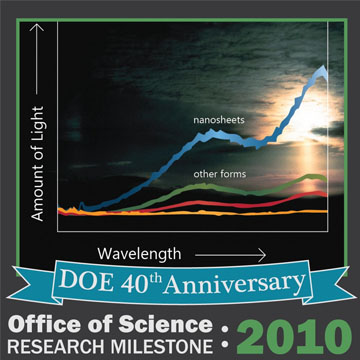

2010 - Changing up the Sheets and Electrons

In 2010, scientists were starting to build ultrathin sheets of materials. The sheets had far different properties than their larger counterparts. In these landmark 2010 papers, scientists described two properties of an amazing ultrathin sheet made from a layer of molybdenum atoms sandwiched between one-atom-thick sulfur layers. In the tiny sheets, electrons were more easily freed to conduct a current than in a larger block of the molybdenum-based material. This change in electron motion also let the material give off large quantities of light. The work offered new ways to control electrons. It opened doors for solar energy and computers, including making the smallest transistor ever.

K.F. Mak, C. Lee, J. Hone, J. Shan, and T.F. Heinz, "Atomically thin MoS2: A new direct-gap semiconductor." Physical Review Letters 105, 136805 (2010). [DOI: 10.1103/PhysRevLett.105.136805]. Subscription required: contact your local librarian for access.

A. Splendiani, L. Sun, Y. Zhang, T. Li, J. Kim, C.Y. Chim, G. Galli, and F. Wang, "Emerging photoluminescence in monolayer MoS2." Nano Letters 10, 1271-1276 (2010). [DOI: 10.1021/nl903868w]. Subscription required: contact your local librarian for access. (Image credit: Claire Ballweg, DOE)

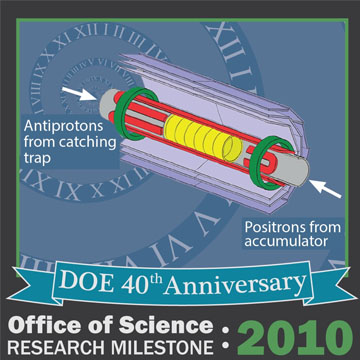

2010 - Trapped Antihydrogen

If you can't contain it, you can't study it. That's the case with antimatter, an elusive substance that may hold secrets to matter, time and the universe. Until then, experiments, including work at CERN in Switzerland, had failed to confine antihydrogen. In this landmark 2010 paper, Gorm Andresen and his colleagues described the first experiments that trapped 38 antihydrogen atoms. Their work was enabled by plasma physics research and has opened the door to precise measurements of antihydrogen and other antimatter atoms.

G.B. Andresen, et al., "Trapped antihydrogen." Nature 468, 673-676 (2010). [DOI: 10.1038/nature09610]. Subscription required: contact your local librarian for access. (Image credit: Adapted by permission from Macmillan Publishers Ltd: Nature, ©2010)

2010 - Variety Is the Spice of MOFs

It's hard to hold a sparrow in a cage built for an elephant. The size and structure of the cage matter. The same is true when building molecular cages to capture and hold carbon dioxide or other gases. The problem scientists had was that they lacked the building materials necessary to create the right cages. In this landmark 2010 paper, scientists demonstrated new building materials to create molecular cages. These materials were essentially bars of different lengths. The bars, or linkers, were connected together to create intricately shaped cages, or metal-organic frameworks (MOFs). The change in structure of these porous frameworks yielded impressive results. In one case, a framework made with linkers of different lengths was 400 percent better at capturing carbon dioxide than the best MOF made from just one type of linker, and the new framework ignored unwanted carbon monoxide.

H. Deng, C.J. Doonan, H. Furukawa, R.B. Ferreira, J. Towne, C.B. Knobler, B. Wang, and O.M. Yaghi, "Multiple functional groups of varying ratios in metal-organic frameworks." Science 327, 846-850 (2010). [DOI: 10.1126/science.1181761]. Subscription required: contact your local librarian for access. (Image credit: Boasap, Wikipedia [GFDL or CC-BY-SA-3.0] via Wikimedia Commons)

2010 - Search for the "Island of Stability" Passes through Tennessine

"Super heavy" elements are missing near the bottom of the periodic table. The elements aren't lost; they simply haven't been and probably won't be found in nature. Although it is a challenging task, scientists have created a number of these elements over the years. In this landmark 2010 paper, a U.S./Russian team reported the creation of element 117, called tennessine. The second heaviest element to date, packed with 117 protons, tennessine points to an "island of stability." On this isle, a region of the periodic chart, elements with the "magic number" of protons and neutrons become long-lived. While tennessine, with a half-life of about 50 thousandths of a second, doesn't prove the island exists, its behavior offers encouraging signs as the hunt for the isle continues.

Y.T. Oganessian, et al., "Synthesis of a new element with atomic number Z=117." Physical Review Letters 104, 142502 (2010). [DOI: 10.1103/PhysRevLett.104.142502]. Subscription required: contact your local librarian for access. (Image credit: Oak Ridge National Laboratory)

2009 - A CHARMMing Computational Microscope

Tracking atoms and electrons in computer simulations created a tug of war between classical physics, which was simple to calculate but not detailed, and quantum physics, which was slow but detailed. This landmark 2009 paper described software that married classical and quantum physics to simulate reactions. The result? Faster, higher resolution views of chemical reactions. The software is called Chemistry at Harvard Molecular Mechanics, CHARMM for short. Work began on CHARMM in the 1970s, and the software has had numerous developers and sponsors, including DOE. CHARMM is used in studies ranging from drug discovery to solar panels. Martin Karplus, a key force behind CHARMM, garnered a 2013 Nobel Prize in Chemistry with his colleagues Michael Levitt and Arieh Warshel.

B.R. Brooks, et al., "CHARMM: The biomolecular simulation program." Journal of Computational Chemistry 30(10), 1545-1614 (2009). [DOI: 10.1002/jcc.21287]. Subscription required: contact your local librarian for access. (Image credit: Claire Ballweg, DOE)

2009 - Decay Data and the Laws of the Universe

Since the early 1950s, scientists have been probing the Standard Model that describes the physical laws of our universe, such as magnetism. One way to learn more about our universe and the accuracy of the model is to study how certain atomic nuclei eject electrons via beta decay. The decay is caused by the weak force. The force is an integral part of the Standard Model. This landmark 2009 paper surveyed detailed measurements of 20 different superallowed beta decays. A superallowed decay means that there are more decays per unit time than expected. With these data, scientists tested our understanding of the weak force to unprecedented precision and set limits on the existence of new physics beyond the Standard Model.

J.C. Hardy and I.S. Towner, "Superallowed 0+ ---> 0+ nuclear beta decays: A new survey with precision tests of the conserved vector current hypothesis and the standard model." Physical Review C 79, 055502 (2009). [DOI: 10.1103/PhysRevC.79.055502]. Subscription required: contact your local librarian for access. (Image credit: public domain)

2009 - Things Are Different on the Inside

Scientists thought topological insulators were possible, but couldn't prove it. In this odd state of matter, a material's interior doesn't conduct electrons but the surface does. This landmark 2009 paper showed that a bismuth-and-tellurium-based material (Bi2Te3) was a topological insulator. At rates even better than predicted, this easy-to-make material conducted small amounts of electricity without loss at room temperature. This work sparked new ideas about how to make faster computer chips. Tools at the Advanced Light Source and Stanford Synchrotron Radiation Lightsource, both DOE scientific user facilities, made the work possible.

Y.L. Chen, J.G. Analytis, J.H. Chu, Z.K. Liu, S.K. Mo, X.L. Qi, H.J. Zhang, D.H. Lu, X. Dai, Z. Fang, S.C. Zhang, I.R. Fisher, Z. Hussain, and Z.X. Shen, "Experimental realization of a three-dimensional topological insulator, Bi2Te3." Science 325, 178-181 (2009). [DOI: 10.1126/science.1173034]. Subscription required: contact your local librarian for access. (Image credit: Yulin Chen and Z.X. Shen)

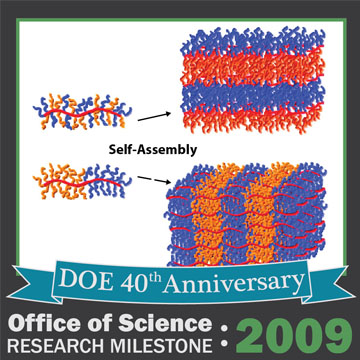

2009 - How to Make Dense, Complex Copolymers

Imagine trying to build a train track without the ties to hold it together. For scientists, that's what creating long, dense polymers was like — the individual pieces (called monomers) were in place, but no method existed to link and secure the structure. This landmark 2009 paper offered insights into a new blueprint to construct such well-organized materials, known as brush block copolymers. The key to success was a ruthenium-based catalyst. It efficiently connected the different monomers and allowed control of the polymer's structure simply based on the proportions of the building blocks and how they assembled. The results from this approach opened doors for the preparation of compact, long-chain polymers, which have potential uses in manipulating light.

Y. Xia, B.D. Olsen, J.A. Kornfield, and R.H. Grubbs, "Efficient synthesis of narrowly dispersed brush copolymers and study of their assemblies: The importance of side chain arrangement." Journal of the American Chemical Society 131, 18525-18532 (2009). [DOI: 10.1021/ja908379q]. Subscription required: contact your local librarian for access. (Image credit: Reprinted with permission, ©2009: American Chemical Society)

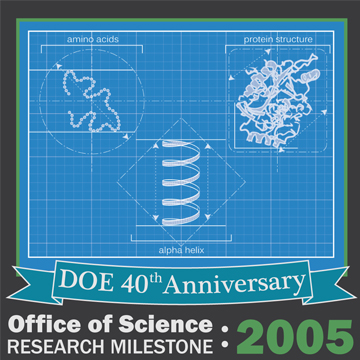

2005 - The Blueprints for the Cell's Protein Factory

At the beginning of this century, the chemical assembly line that cells use to form life's building blocks – proteins – wasn't well defined. Understanding that assembly line is key to combatting disease, by disabling the ribosomes in bacteria. This landmark 2005 paper, which used two DOE light sources, shed light on the assembly line. The scientists defined how a ribosome, the cell's protein factory, quickly connects amino acids to create proteins. Yale University professor Thomas Steitz received the 2009 Nobel Prize in Chemistry for his contributions to ribosomal research.

T.M. Schmeing, K.S. Huang, D.E. Kitchen, S.A. Strobel, and T.A. Steitz, "Structural insights into the roles of water and the 2' hydroxyl of the P site tRNA in the peptidyl transferase reaction." Molecular Cell 20, 437-448 (2005). [DOI: 10.1016/j.molcel.2005.09.006]. (Image credit: Protein by Emw (own work) [CC BY-SA 3.0 or GFDL], via Wikimedia Commons. Modified by Claire Ballweg, DOE)

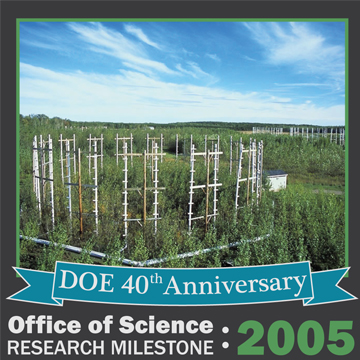

2005 - Higher Levels of Carbon Dioxide Led to Greater Tree Growth

How forests would respond to higher levels of carbon dioxide was one of the biggest uncertainties in early climate models. This landmark 2005 paper gave researchers far more confidence in those models by providing data from actual forests. Researchers examined how increasing levels of carbon dioxide in the air influence tree growth. Using a shared framework, they showed a 23 percent average increase in how much carbon trees took up at the levels of carbon dioxide estimated in 2050. In addition, it showed these results were consistent across forests with different structures and growth rates.

R.J. Norby, et al., "Forest response to elevated CO2 is conserved across a broad range of productivity." Proceedings of the National Academy of Sciences 102, 18052-18056 (2005). [DOI: 10.1073/pnas.0509478102]. Subscription required: contact your local librarian for access. (Image credit: Oak Ridge National Laboratory)

2005 - Speeding Up Searches through Giant Datasets

Like trying to find a particular sentence in a thick book, finding exactly the right pieces of data in a vast collection can be extremely time-consuming. However, huge, complex datasets with numerous characteristics are far easier to search with a unique indexing technology called FastBit. This landmark 2005 paper described how researchers and programmers from DOE's Lawrence Berkeley National Laboratory developed the FastBit software. When it was first released in 2005, FastBit analyzed read-only scientific datasets 10 to 100 times faster than comparable commercial software. Originally designed to help particle physicists sort through billions of data records to find a few key pieces of information, FastBit has since been used to develop applications that analyze everything from combustion data to Yahoo!'s web content to financial data.

K. Wu, "FastBit: An efficient indexing technology for accelerating data-intensive science." Journal of Physics: Conference Series 16, 556 (2005). [DOI: 10.1088/1742-6596/16/1/077]. (Image credit: U.S. Department of Energy)
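To make the indexing idea concrete, here is a minimal sketch of a bitmap index, the general kind of structure that FastBit builds on. It is not FastBit's API and omits the compression a real system would need; the record values and the function names `build_bitmap_index` and `rows_from_bitmap` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def build_bitmap_index(values):
    """Map each distinct value to an int whose bit i is set when values[i] == value."""
    index = defaultdict(int)
    for row, value in enumerate(values):
        index[value] |= 1 << row
    return dict(index)


def rows_from_bitmap(bitmap):
    """Return the row numbers whose bits are set in the bitmap."""
    rows = []
    row = 0
    while bitmap:
        if bitmap & 1:
            rows.append(row)
        bitmap >>= 1
        row += 1
    return rows


# Hypothetical read-only records: one detector name and one energy bin per event.
detector = ["A", "B", "A", "C", "B", "A"]
energy_bin = ["low", "high", "high", "low", "high", "high"]

detector_index = build_bitmap_index(detector)
energy_index = build_bitmap_index(energy_bin)

# The query "detector == A AND energy_bin == high" becomes a single bitwise AND.
matches = detector_index["A"] & energy_index["high"]
print(rows_from_bitmap(matches))  # rows 2 and 5
```

Because the data never change after they are written, the bitmaps can be built once and reused for every query, which is why this style of index suits read-only scientific datasets.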

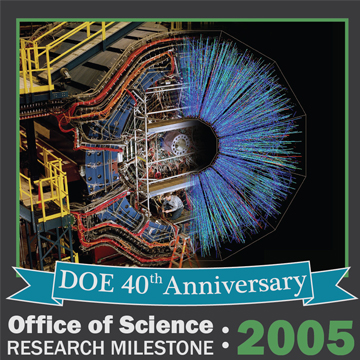

2005 - Free Quarks and Gluons Make the "Perfect Liquid"

Just after the Big Bang, quarks and gluons – the fundamental building blocks of atomic nuclei – were free and unrestricted. Only a few microseconds later, they started forming into the protons and neutrons that (with electrons) make up atoms today. While scientists theorized that this free "quark-gluon plasma" was a weakly interacting gas, results published in this landmark 2005 paper contradicted that idea. Scientists reported that when the Relativistic Heavy Ion Collider replicated these early conditions, the free quarks and gluons interacted strongly. They created a "perfect" liquid that flowed with almost zero resistance. In addition to revealing information about the universe's earliest moments, this discovery gave scientists insight into other forms of matter, including ordinary solids.

STAR Collaboration, J. Adams, et al., "Experimental and theoretical challenges in the search for quark gluon plasma: The STAR Collaboration's critical assessment of the evidence from RHIC collisions." Nuclear Physics A 757, 102 (2005). [DOI: 10.1016/j.nuclphysa.2005.03.085]. Subscription required: contact your local librarian for access.

PHENIX Collaboration, K. Adcox, et al., "Formation of dense partonic matter in relativistic nucleus-nucleus collisions at RHIC: Experimental evaluation by the PHENIX collaboration." Nuclear Physics A 757, 184-283 (2005). [DOI: 10.1016/j.nuclphysa.2005.03.086]. Subscription required: contact your local librarian for access. (Image credit: Brookhaven National Laboratory)

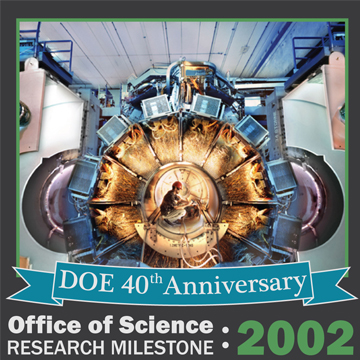

2002 - The Asymmetry Between Matter and Antimatter

Physics aims to determine the laws of the universe that everything should reliably follow. Except sometimes, things don't follow the rules. In charge-parity violation, particles and their antiparticles (same particle but opposite charge) don't act the same, even though the laws of physics in the early 1960s predicted that they should. While an experiment in 1964 showed the first evidence for charge-parity violation for a single type of particle, this landmark 2002 paper showed that charge-parity violation was more widespread by observing it in another particle, the B meson. This experimental observation paved the way for the 2008 Nobel Prize in Physics and played an important role in exploring why the early universe favored matter over antimatter.

B. Aubert, et al., "Measurement of the CP Asymmetry Amplitude sin2β with B0 mesons." Physical Review Letters 89, 201802-1-7 (2002). [DOI: 10.1103/PhysRevLett.89.201802]. Subscription required: contact your local librarian for access. (Image credit: Peter Ginter, SLAC)

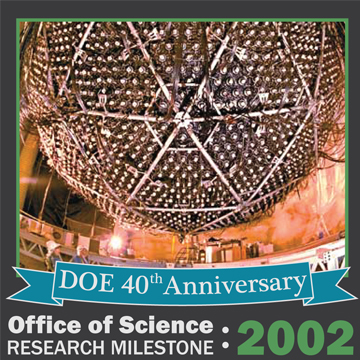

2002 - Discovery that Solar Neutrinos Change Type Shows Neutrinos Have Mass

Neutrino experiments in the 1960s detected fewer neutrinos from the sun than expected. Although neutrinos were originally thought to be massless, a theory proposed in 1985 suggested that if they had mass they could also change between three types as they travel. In 1998, the Super-Kamiokande experiment showed that neutrinos created in the atmosphere changed type as they traveled. This changing of neutrino types could explain the apparent deficit of solar neutrinos for detectors only sensitive to one of the three types. The Sudbury Neutrino Observatory was built to solve this problem by being sensitive to all neutrino types. This landmark 2002 paper, which contributed to the 2015 Nobel Prize in Physics, described the discovery that neutrinos produced by the sun change type as they travel and therefore must have mass. This process, called neutrino oscillation, continues to be studied and may provide insight into why matter dominates the universe today even though the Big Bang produced matter and antimatter in equal amounts.

Q.R. Ahmad, et al., "Direct evidence for neutrino flavor transformation from neutral-current interactions in the Sudbury Neutrino Observatory." Physical Review Letters 89, 011301 (2002). [DOI: 10.1103/PhysRevLett.89.011301]. Subscription required: contact your local librarian for access. (Image credit: Brookhaven National Laboratory)

2001 - Sequencing the Human Genome

One hundred years ago, lacking knowledge of our genetic code, the medical field simply didn't understand a major influence on health and illness. Today scientists regularly sequence DNA, leading to the ability to adapt medical treatments to individuals' genetics and analyze genomes of many other species. This 2001 blockbuster paper reported the initial sequencing of the vast majority (94 percent) of human genetic material (DNA). Because the Human Genome Project made the data available to the scientific community without restriction, it spurred a broad range of discoveries, creating an estimated $800 billion in economic impact. The Human Genome Project, conceived and initiated by DOE in 1985, has had far-reaching implications for energy, manufacturing, environmental and medical science, and the establishment of the scientific field of genomics.

E.S. Lander, et al., "Initial sequencing and analysis of the human genome." Nature 409, 860-921 (2001). [DOI: 10.1038/35057062]. Subscription required: contact your local librarian for access. (Image credit: Jonathan Bailey)

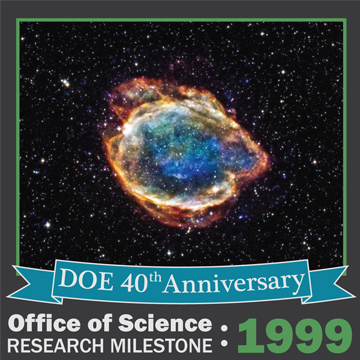

1999 - Supernovae Provide Evidence the Expansion of the Universe is Speeding Up

Until the dawn of the 21st century, scientists assumed the universe's expansion was slowing down as gravity pulled debris from the Big Bang back together. But now scientists know that the universe's expansion is speeding up, driven by an unknown cause referred to as "dark energy." This landmark 1999 paper reported measurements from Type Ia supernovae – which all reach the same maximum brightness when they explode – that indicated expansion is speeding up. These observations found that distant Type Ia supernovae were 25 percent less bright than they "should" be if the universe's expansion is slowing down. The discovery of the accelerating expansion of the universe was recognized with the 2011 Nobel Prize in Physics.

S. Perlmutter, et al., "Measurements of omega and lambda from 42 high-redshift supernovae." The Astrophysical Journal 517, 565-586 (1999). [DOI: 10.1086/307221]. (Image credit: NASA)
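As a rough worked example, the "25 percent less bright" figure can be turned into the units astronomers use. The sketch below assumes only the standard magnitude scale and the simple inverse-square law; the paper's actual analysis fits a full cosmological model, so the distance factor here is illustrative, not a result from the paper.

```python
import math

# Observed brightness relative to expectation ("25 percent less bright").
flux_ratio = 0.75

# Astronomical magnitudes are logarithmic: a fainter object has a larger magnitude.
delta_magnitude = -2.5 * math.log10(flux_ratio)

# Inverse-square law: for a fixed intrinsic luminosity, flux falls as 1/distance^2.
distance_ratio = 1 / math.sqrt(flux_ratio)

print(f"Magnitude difference: {delta_magnitude:.2f} mag")
print(f"Implied distance: {distance_ratio:.3f} times the expected distance")
```

Under these simplifying assumptions, the supernovae appear about 0.31 magnitudes fainter, as if they were roughly 15 percent farther away than a decelerating universe would place them.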

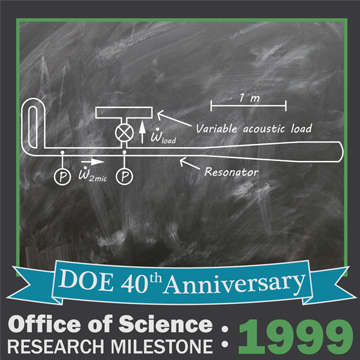

1999 - The Sounds and the Energy

In 1816, Scottish minister Robert Stirling devised a way to use sound to drive an engine. But the design was too expensive to produce at the time. Over the years, scientists reconsidered Stirling's ideas but with limited success. Enter Scott Backhaus, a postdoctoral fellow at DOE's Los Alamos National Laboratory, and engineer Greg Swift. In this landmark 1999 paper, they described a simple, energy-efficient engine with no moving parts. The engine used heat and compressed helium to produce intense sound waves, or acoustic energy. The acoustic energy can be used in certain types of refrigerators or to produce electricity. The device was more efficient than similar heat engines and was comparable to combustion engines. This work led to significant interest in using sound waves to produce energy.

S. Backhaus and G.W. Swift, "A thermoacoustic Stirling heat engine." Nature 399, 335-338 (1999). [DOI: 10.1038/20624]. (Image credit: Adapted (Claire Ballweg, DOE) by permission from Macmillan Publishers Ltd: Nature (see citation), ©1999)

1998 - Tracking Down the Ghosts of the Universe

When this paper was written in 1998, the Standard Model wasn't complete. Ghost-like neutrinos – particles that mostly pass through all matter, move at near light speed and have no charge – weren't behaving as scientists expected because fewer were seen from the sun than predicted. This landmark 1998 paper, which contributed to the 2015 Nobel Prize in Physics, answered a conundrum about neutrinos produced in Earth's atmosphere. Specifically, this result from the Super-Kamiokande experiment proved that neutrinos produced in the atmosphere were changing into other types of neutrinos as they traveled. In doing so, the scientists also showed that neutrinos must have mass. This process of neutrinos changing type, called neutrino oscillation, provided a way to help understand the dramatic difference between the numbers of predicted and observed solar neutrinos. In 2002, the Sudbury Neutrino Observatory experiment provided the remaining pieces of the solar neutrino puzzle.

Y. Fukuda, et al., "Evidence for oscillation of atmospheric neutrinos." Physical Review Letters 81, 1562-1567 (1998). [DOI: 10.1103/PhysRevLett.81.1562]. (Image credit: Nathan Johnson, PNNL)
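The link between changing type and having mass can be seen in the standard two-flavor approximation for oscillation in vacuum, sketched below. The parameter values are illustrative assumptions, not numbers taken from the paper, and the formula ignores the effects of matter, which are important for neutrinos leaving the sun.

```python
import math


def oscillation_probability(theta, delta_m2_ev2, length_km, energy_gev):
    """Two-flavor vacuum probability that a neutrino is detected as the other type.

    theta: mixing angle in radians
    delta_m2_ev2: difference of the squared masses in eV^2
    length_km: distance traveled in km
    energy_gev: neutrino energy in GeV
    """
    phase = 1.267 * delta_m2_ev2 * length_km / energy_gev
    return math.sin(2 * theta) ** 2 * math.sin(phase) ** 2


# Illustrative values only (assumptions for this sketch).
theta = math.pi / 4   # maximal mixing
delta_m2 = 2.5e-3     # eV^2
energy = 1.0          # GeV

# Short paths (from overhead) versus long paths (through the Earth).
for length in (10, 500, 10000):
    p = oscillation_probability(theta, delta_m2, length, energy)
    print(f"L = {length:>5} km: P(change) = {p:.3f}")

# With no mass difference, the probability is zero at any distance.
print(oscillation_probability(theta, 0.0, 10000, energy))
```

The probability depends on the difference of the squared masses, so if all neutrinos were massless that difference would vanish and no change of type could occur.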

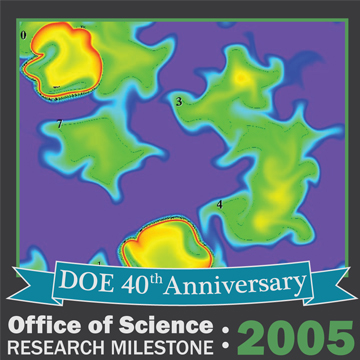

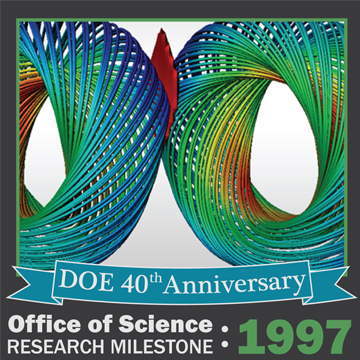

1997 - Math Wins the Heart of an Atom

For years, determining the interactions involving individual protons or neutrons required intense calculations based on a series of assumptions about the particles and fields involved. This landmark 1997 paper offered a more sophisticated approach that took advantage of increasing computational power to answer questions about the reactions and structures at the heart of an atom. The new computational approach, quantum Monte Carlo, allows researchers to see the splendor of the nuclear shell structure emanating directly from the interactions between protons and neutrons. This theoretical work and related advanced computer algorithms opened the doors for scientists using computer simulations to examine and predict the energy levels of some atomic nuclei.

B.S. Pudliner, V.R. Pandharipande, J. Carlson, S.C. Pieper, and R.B. Wiringa, "Quantum Monte Carlo calculations of nuclei with A<~7." Physical Review C 56, 1720 (1997). [DOI: 10.1103/PhysRevC.56.1720]. Subscription required: contact your local librarian for access. (Image credit: Argonne National Laboratory, CC BY-NC-SA 2.0)
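For a feel of how Monte Carlo sampling can estimate a quantum energy, here is a toy sketch. It is a much simpler relative of the methods in the paper: a variational Monte Carlo estimate for one particle in a one-dimensional harmonic well, not a calculation of a nucleus. The trial wavefunction exp(-alpha x^2), the units (hbar = m = omega = 1), and all function names are assumptions made for illustration.

```python
import math
import random


def local_energy(x, alpha):
    """Local energy (H psi) / psi for psi = exp(-alpha * x^2), with hbar = m = omega = 1."""
    return alpha + x * x * (0.5 - 2.0 * alpha * alpha)


def vmc_energy(alpha, n_steps=20000, step_size=1.0, seed=0):
    """Estimate the energy by Metropolis sampling of |psi|^2."""
    rng = random.Random(seed)
    x = 0.0
    total = 0.0
    for _ in range(n_steps):
        trial = x + rng.uniform(-step_size, step_size)
        # Acceptance ratio |psi(trial)|^2 / |psi(x)|^2.
        ratio = math.exp(-2.0 * alpha * (trial * trial - x * x))
        if rng.random() < ratio:
            x = trial
        total += local_energy(x, alpha)
    return total / n_steps


for alpha in (0.3, 0.5, 0.7):
    print(f"alpha = {alpha}: energy estimate = {vmc_energy(alpha):.3f}")
```

At alpha = 0.5 the trial function is the exact ground state, so the local energy is 0.5 at every sampled point and the estimate carries no statistical noise; other values of alpha give higher, noisier estimates. Calculations for real nuclei follow the same sample-and-average pattern but with many interacting particles, which is where the computational power mentioned above comes in.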

1997 - Simplifying Speed

While supercomputers have split up the work and assigned it to different processors since 1982, coding these systems was time-consuming, error-prone, and required a high level of expertise. This landmark publication describes the PETSc 2.0 software, or the Portable, Extensible Toolkit for Scientific Computation. It made it easier to program networks using parallel processing, whether in supercomputers or conventional computers networked together. The toolkit brought together fundamental routines needed to run scientific models that use multiple unknown variables. Thousands of models have used the PETSc suite, including ones that describe air movement over airplane wings and water flowing across the Everglades.

S. Balay, W.D. Gropp, L.C. McInnes, and B.F. Smith, "Efficient management of parallelism in object-oriented numerical software libraries." Chapter 10 in E. Arge, A.M. Bruaset and H.P. Langtangen (Eds), Modern Software Tools in Scientific Computing, pp. 163-202 (1997). Contact your local librarian for access. (Image credit: National Energy Research Scientific Computing Center)

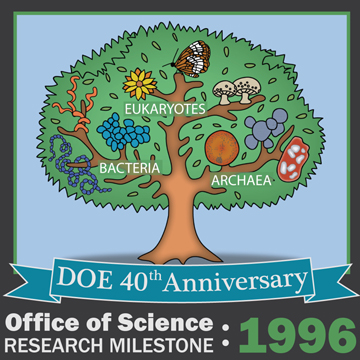

1996 - The Tree of Life Gets a New Branch

Classification schemes only work if everything fits in the scheme. For many years, biologists struggled because some single-celled organisms didn't fit with the known microbes called bacteria. This landmark paper described results of sequencing the genome of a heat-loving microbe, Methanococcus jannaschii, originally isolated from vents on the ocean's floor. The genome sequencing results confirmed earlier ribosomal RNA-based classification schemes establishing Archaea as a distinct third branch of the Tree of Life. Prokaryotes are actually either Archaea or bacteria; the difference is in the genes. The genes and metabolic pathways in Archaea are sufficiently different from those found in bacteria and those found in complex, multi-celled organisms to warrant a separate classification. The paper was an important confirmation of the tripartite view of the Tree of Life.

C.J. Bult, et al., "Complete genome sequence of the methanogenic archaeon, Methanococcus jannaschii." Science 273, 1058-1073 (1996). [DOI: 10.1126/science.273.5278.1058]. Subscription required: contact your local librarian for access. (Image credit: Claire Ballweg, Department of Energy)

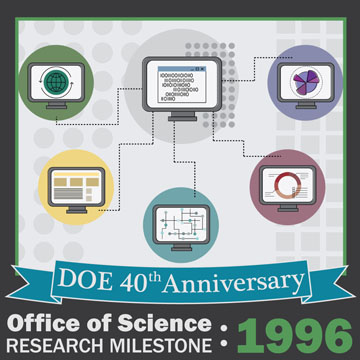

1996 - Message Passing Gets the Job Done on Supercomputers

In a most unusual case of writer's block, programmers could not commit to writing different versions of their codes if they could not move them from one machine to another. In the 1980s, computers used vendor-specific libraries, which let programmers write codes that took advantage of supercomputing's power but tied each code to one vendor's machines. A group of computer scientists (many funded by DOE), computer vendors and the scientists who used the computers to solve tough problems worked together over several years to define the Message Passing Interface (MPI) standard, which created an interface between the code and the different libraries. This landmark 1996 paper described how MPICH, the first full implementation of the MPI standard, allowed programmers to develop software with confidence that it would run on both current and future supercomputers of every shape and size, including some of the world's fastest supercomputers. The software is freely available, and both users and vendors have been quick to adopt it.

W. Gropp, E. Lusk, and A. Skjellum, "A high-performance, portable implementation of the MPI Message Passing Interface." Parallel Computing 22, 789-828 (1996). (Image credit: Claire Ballweg, Department of Energy)

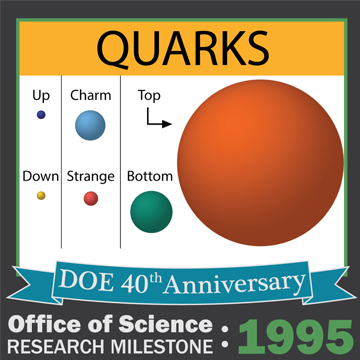

1995 - Climbing to the Top of Quarks

Since the 1977 discovery of the bottom quark, an elementary particle, scientists had been hunting for its partner particle, the top quark, as predicted by the Standard Model. Published 18 years after the search began, these landmark 1995 papers described the discovery of the top quark, a single particle so massive that it weighs nearly as much as an entire atom of gold. The top quark was discovered by the CDF and D0 experiments at DOE's Fermilab Tevatron Collider in the high-energy collisions of protons and antiprotons. Since the discovery, both the Fermilab Tevatron Collider and CERN Large Hadron Collider programs have measured the top quark's properties with increasing precision to test the Standard Model.

F. Abe, et al. (CDF Collaboration), "Observation of top quark production in p̄p collisions with the collider detector at Fermilab." Physical Review Letters 74, 2626 (1995). [DOI: 10.1103/PhysRevLett.74.2626]. Subscription required: contact your local librarian for access.

S. Abachi, et al. (D0 Collaboration), "Observation of the top quark." Physical Review Letters 74, 2632 (1995). [DOI: 10.1103/PhysRevLett.74.2632]. Subscription required: contact your local librarian for access. (Image credit: Claire Ballweg, Department of Energy)

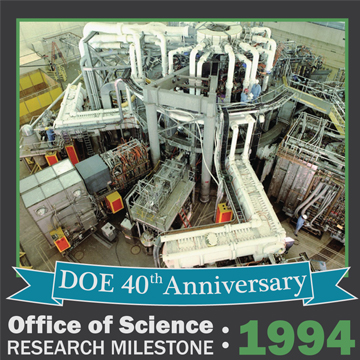

1994 - A Shift in the Likelihood of Fusion

A challenge with fueling a fusion reactor is reliably getting the fusion reaction to occur. In this landmark 1994 paper, scientists described a high-powered mixture of fusion fuels that allowed the Tokamak Fusion Test Reactor (TFTR) to shatter the world record for fusion energy when it generated 6 million watts of power. This milestone was achieved using a 50/50 blend of two hydrogen isotopes: deuterium and tritium. The work was instrumental in building the scientific basis for ITER, a tokamak-based reactor to be fueled by deuterium and tritium and designed to test the viability of fusion as an energy source.

J.D. Strachan, et al., "Fusion power production from TFTR plasmas fueled with deuterium and tritium." Physical Review Letters 72, 3526 (1994). [DOI: 10.1103/PhysRevLett.72.3526]. Subscription required: contact your local librarian for access. (Image credit: Princeton Plasma Physics Laboratory)

1993 - Storing Data: From 1 Byte a Second to 1,000,000,000

In the early 1990s, supercomputers stored information at the frustratingly slow rate of 1 to 10 million bytes a second. A byte is the space needed to store one character. Today, supercomputers can save a billion bytes a second – an innovation largely spurred by this landmark 1993 paper written by researchers at the DOE national labs and IBM. The paper described the High Performance Storage System (HPSS). The HPSS software coordinates among computers, hard disks and tape drives to store and manage access to extreme amounts of data. It can serve up data to multiple users simultaneously, reducing wait times involved in research and analysis. Further, the design of the software lets storage capacity and technology grow and change to meet the demands for increasingly detailed analysis on ever-larger supercomputers.

R.A. Coyne, H. Hulen, and R. Watson, "The High Performance Storage System." Supercomputing '93 Proceedings of the 1993 ACM/IEEE conference on Supercomputing 83-92 (1993). [DOI: 10.1145/169627.169662]. The paper is available via a free web account on ACM Digital Library. (Image credit: Pacific Northwest National Laboratory)

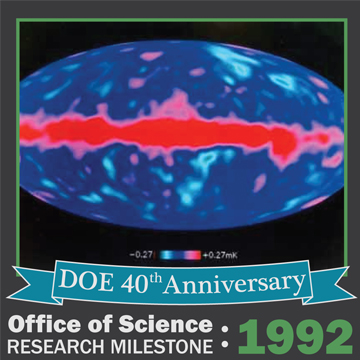

1992 - The Big Bang Becomes a Household Phrase

While the Big Bang often discussed in science classes today was first theorized in the 1940s, it wasn't actually confirmed until later. This landmark 1992 paper presented the discovery and mapping of tiny temperature fluctuations in the cosmic microwave background by the NASA-led COBE mission, where DOE researchers led one of the primary instrument teams. These minuscule changes, tiny ripples in space-time fabric, were the seeds of stars and planets. The finding – which Stephen Hawking called the "discovery of the century, if not all time" – pushed the Big Bang into the common vocabulary and garnered the 2006 Nobel Prize in Physics.

G.F. Smoot, C.L. Bennett, A. Kogut, E.L. Wright, J. Aymon, N.W. Boggess, E.S. Cheng, S. Gulkis, and M.G. Hauser, "Structure in the COBE differential microwave radiometer first-year maps." The Astrophysical Journal 396, L1-L5 (1992). [DOI: 10.1086/186504]. (Image credit: Berkeley Lab)

1990 - Smooth Sailing for Plasmas Solves Issues in Fusion Science

Creating conditions for nuclear fusion in the laboratory requires the calming of turbulence generated by scorching hot plasmas. Rapid spatial changes (known as shear) in the plasma density and temperature drive turbulent eddies that carry heat and particles outside of the plasma. In this landmark 1990 paper, Richard Groebner and his team at the DIII-D National Fusion Facility realized that turbulence could be interrupted by a sheared flow of plasma. The sheared flow reduces the size of turbulent eddies and allows the plasma to reach higher overall temperatures. This turbulence-reducing process is one that will allow the ITER tokamak to demonstrate net fusion power generation.

R.J. Groebner, K.H. Burrell, and R.P. Seraydarian, "Role of edge electric field and poloidal rotation in the L-H transition." Physical Review Letters 64, 3015 (1990). [DOI: 10.1103/PhysRevLett.64.3015]. Subscription required: contact your local librarian for access. (Image credit: Solar Dynamics Observatory/NASA)

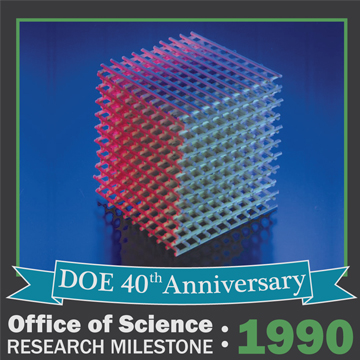

1990 - The Median on Light's Highway

Just as a highway median separates and controls traffic, materials that control light also use a median that researchers call a band gap. Scientists thought it would be easy to build orderly three-dimensional structures with glass-like spheres to create materials with band gaps. They built and tested numerous options, but sorting through the many permutations, such as the size of spheres, to find band gaps was like finding a needle in a very large haystack. This landmark 1990 paper took the problem off the laboratory bench by calculating the right arrangement of spheres to create a band gap. They found that to get a band gap they needed to put these spheres in a four-sided, tetrahedral structure, reminiscent of diamonds. A short time later, others verified that this structure controls light as calculated. The paper is cited as an early starting point in the creation of materials that can control light.

K.M. Ho, C.T. Chan, and C.M. Soukoulis, "Existence of a photonic gap in periodic dielectric structures." Physical Review Letters 65, 3152-3155 (1990). Subscription required: contact your local librarian for access. (Image credit: Ames Laboratory)

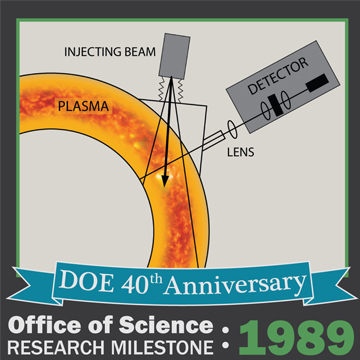

1989 - Measuring Plasma Current in a Tokamak

The fourth state of matter (a plasma, or hot ionized gas) can be used to produce vast amounts of electricity, but first it must be controlled. In the 1950s, scientists used helical magnetic fields to bottle a plasma in a configuration known as a tokamak that insulated it from the surrounding walls. But they needed a way to discern how induced electrical currents in the plasma modify the magnetic field that guides the plasma and protects the surrounding walls. A landmark 1989 paper explained how to measure the magnetic field by interpreting visible light emitted by atoms injected into the plasma using accelerated hydrogen beams. Today, these measurements allow scientists to precisely tailor the magnetic field to improve plasma confinement and maximize fusion performance.

F.M. Levinton, R.J. Fonck, G.M. Gammel, R. Kaita, H.W. Kugel, E.T. Powell, and D.W. Roberts, "Magnetic field pitch-angle measurements in the PBX-M tokamak using the motional Stark effect." Physical Review Letters 63, 2060 (1989). [DOI: 10.1103/PhysRevLett.63.2060]. Subscription required: contact your local librarian for access. (Image credit: Claire Ballweg, DOE)

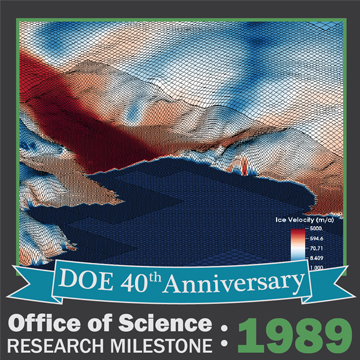

1989 - Details Where You Want Them

If you're modeling an airplane wing, you want to focus your supercomputer on the details that matter. That is, you want to spend computing effort on the most interesting parts of the problem rather than spreading it evenly everywhere. This landmark 1989 paper described a solution to the problem: adaptive mesh refinement (AMR). AMR gives a much more detailed picture of what is happening by concentrating resolution on the areas of interest. AMR is used worldwide and in a wide array of applications, from airplane engines to ice sheets.

M.J. Berger and P. Colella, "Local adaptive mesh refinement for shock hydrodynamics." Journal of Computational Physics 82(1), 64-84 (1989). [DOI: 10.1016/0021-9991(89)90035-1]. Contact your local librarian for access. (Image credit: Dan Martin, Lawrence Berkeley National Laboratory)
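The idea of putting detail only where it is needed can be illustrated with a toy one-dimensional example. The sketch below is not the block-structured method of the paper; it is a much simpler cell-splitting scheme, and the function names, test profile and tolerance are all assumptions chosen for illustration. Cells are split in half wherever a sample function changes sharply across them, and left coarse elsewhere.

```python
import math

def refine(cells, f, tol, max_level=6, level=0):
    """Recursively halve any cell (a, b) where f changes by more than tol."""
    out = []
    for a, b in cells:
        if level < max_level and abs(f(b) - f(a)) > tol:
            mid = 0.5 * (a + b)
            # Refine the two halves further if they still need it
            out.extend(refine([(a, mid), (mid, b)], f, tol, max_level, level + 1))
        else:
            out.append((a, b))
    return out

def steep_profile(x):
    # Hypothetical test function: smooth almost everywhere, sharp front near x = 0.5
    return math.tanh(50.0 * (x - 0.5))

# Start from 8 equal cells on [0, 1] and refine where the profile is steep
coarse = [(i / 8, (i + 1) / 8) for i in range(8)]
mesh = refine(coarse, steep_profile, tol=0.1)
widths = [b - a for a, b in mesh]
smallest = min(mesh, key=lambda cell: cell[1] - cell[0])
```

In this sketch the cells far from the front keep their original width, while the cells around x = 0.5 shrink to a small fraction of it, so the total number of cells stays far below what a uniformly fine mesh would need.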

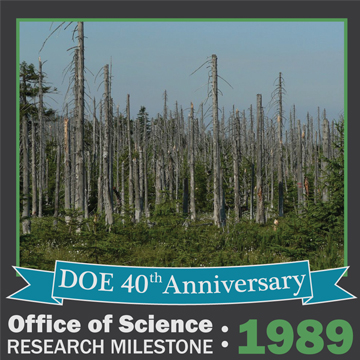

1989 - Acid Rain and the Nation

How the "acid" got into "acid rain", and why rain, snow, fog, hail and dust containing acidic components fell far from the source, wasn't known until this landmark 1989 paper. Stephen Schwartz at DOE's Brookhaven National Laboratory identified the underlying causes, processes and impacts of this precipitation. He also explained how sulfur and nitrogen oxides released at one source caused acid rain in other environments. Schwartz's findings gave the first clear picture of the nationwide impacts of different sulfur and nitrogen sources and led to changes to the Clean Air Act.

S.E. Schwartz, "Acid deposition: Unraveling a regional phenomenon." Science 243, 753-763 (1989). [DOI: 10.1126/science.243.4892.753]. Subscription required: contact your local librarian for access. (Photo credit: public domain)

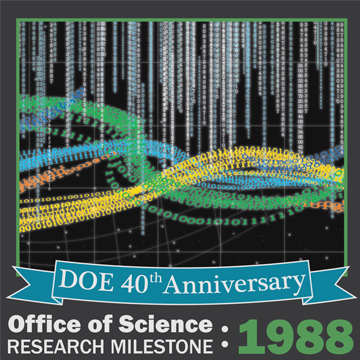

1988 - Teaching Protocol to Computers

In October 1986, the "information highway" had the first of what was to be a series of traffic jams. These crashes stopped people from retrieving email, accessing data or viewing websites. How could these collapses be avoided? This landmark 1988 paper described and analyzed five algorithms that shed light on the flow of data packets. In addition, the paper detailed how to tune the Berkeley Unix Transmission Control Protocol, which enabled systems to connect and exchange data streams under abysmal network conditions. This paper laid out the foundations for controlling and avoiding congestion in the Internet.

V. Jacobson, "Congestion avoidance and control." ACM SIGCOMM'88 18(4), 314-329 (1988). [DOI: 10.1145/52325.52356]. The paper is available via a free web account on ACM Digital Library. (Image credit: Claire Ballweg, DOE)
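Two of the ideas associated with this paper, slow start and congestion avoidance, can be sketched in a few lines. The example below is a simplified, per-round-trip model and not the Berkeley Unix code: the function name, the initial threshold of 16 segments and the choice of which round trip sees a loss are all assumptions made for illustration. The sender's window grows quickly at first, then cautiously, and is cut back sharply when a loss signals congestion.

```python
def simulate_cwnd(rounds, loss_rounds, ssthresh=16.0):
    """Return the congestion window (in segments) at the start of each round trip."""
    cwnd = 1.0
    history = []
    for rtt in range(rounds):
        history.append(cwnd)
        if rtt in loss_rounds:
            # Loss detected: remember half the current window, restart from one segment
            ssthresh = max(cwnd / 2.0, 2.0)
            cwnd = 1.0
        elif cwnd < ssthresh:
            # Slow start: the window doubles every round trip up to the threshold
            cwnd = min(cwnd * 2.0, ssthresh)
        else:
            # Congestion avoidance: probe gently, one extra segment per round trip
            cwnd += 1.0
    return history

# Hypothetical scenario: 12 round trips with a single loss during round trip 7
trace = simulate_cwnd(rounds=12, loss_rounds={7})
print(trace)
```

The printed trace rises steeply, flattens into a slow climb, collapses at the loss, and then climbs again, which is the sawtooth pattern that lets many senders share a congested link without collapsing it.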

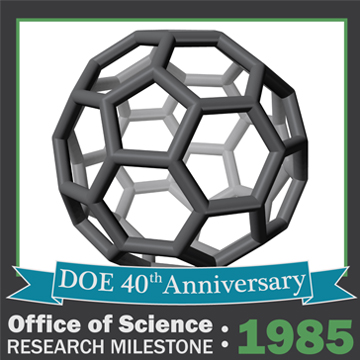

1985 - Of Soccer Balls and Carbon

Carbon is widely used in nature and industry because of its ability to bond with other elements. Until the mid-1980s, however, scientists didn't know there was a molecular form of carbon that bonded only to itself. This landmark 1985 paper identified that new form: 60 carbon atoms arranged to resemble a soccer ball. Richard Smalley and his colleagues named it after R. Buckminster Fuller, whose geodesic domes provided a clue as to the structure. Discovering buckminsterfullerene, buckyballs for short, led to the discovery of other novel carbon structures. The discovery of these structures, called fullerenes and used in biomedical applications, garnered the 1996 Nobel Prize in Chemistry for Robert Curl Jr., Harold Kroto and Richard Smalley.

H.W. Kroto, J.R. Heath, S.C. O'Brien, R.F. Curl, and R.E. Smalley, "C-60: Buckminsterfullerene." Nature 318(6042), 162-163 (1985). [DOI: 10.1038/318162a0]. Subscription required: contact your local librarian for access. (Image credit: public domain)

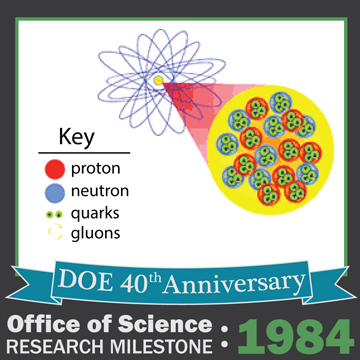

1984 - The Nature of Quarks in Protons

As a good chef will tell you, if you don't understand the ingredients, you can't control the menu. Scientists knew by 1984 that the protons and neutrons at the center of the atom were "made" of quarks, but they lacked the pivotal insights to understand how this internal structure of protons and neutrons changes when many of them are very close to each other in an atomic nucleus. In this landmark 1984 paper, scientists presented the results of blasting nuclei of different atoms with beams of electrons at DOE's Stanford Linear Accelerator Center. The results showed that the quark structure of the individual protons and neutrons embedded in a nucleus doesn't match the quark structure of free or individual protons and neutrons. This work, which began with a mere 80 hours of beam time at SLAC, highlighted the importance of considering the quark structure of protons, neutrons and nuclei in nuclear physics and went on to motivate the physicists who came to build DOE's Jefferson Laboratory.

R.G. Arnold, P.E. Bosted, C.C. Chang, J. Gomez, A.T. Katramatou, G.G. Petratos, A.A. Rahbar, S.E. Rock, A.F. Sill, Z.M. Szalata, A. Bodek, N. Giokaris, D.J. Sherden, B.A. Mecking, and R.M. Lombard, "Measurements of the A dependence of deep-inelastic electron scattering from nuclei." Physical Review Letters 52, 727 (1984). [DOI: 10.1103/PhysRevLett.52.727]. Subscription required: contact your local librarian for access. (Image credit: Brookhaven National Laboratory)

1978 - Confining a Tokamak's Plasma

To confine the fourth state of matter (a plasma, or hot ionized gas), the plasma must be insulated from the surrounding walls. In donut-shaped tokamaks, electrical currents in the plasma are used to confine the plasma within a helical magnetic field. Early methods to sustain the electrical current meant the plasmas could only be confined for a short time. In a landmark 1978 paper, Nathaniel "Nat" Fisch from the Massachusetts Institute of Technology proposed a current-sustaining technique that would allow continuous, steady-state operation to be realized. The approach is used routinely in present-day test reactors.

N.J. Fisch, "Confining a tokamak plasma with rf-driven currents." Physical Review Letters 41(13), 873 (1978). [DOI: 10.1103/PhysRevLett.41.873]. Subscription required: contact your local librarian for access. (Photo credit: Ron Levenson, MIT, CC BY 3.0)

1977 - Getting to the Bottom...of Quarks

Quarks are building blocks for matter. By the mid-1970s, four types of quarks had been discovered. Calculations predicted there were more types of quarks, but scientists couldn't prove it. In 1977, Leon Lederman led a team from Fermilab, Columbia University, and Stony Brook University in discovering a new elementary particle, the bottom quark, along with its antiquark. The bottom (or b) quark, which some call the beauty quark, was discovered at Fermilab, soon confirmed by experiments in Europe, and is now a frequent decay product at the Large Hadron Collider at CERN. Located in Switzerland, CERN, or the European Organization for Nuclear Research, is where physicists and engineers use massive, complex instruments to study quarks and other elementary particles.

S.W. Herb, D.C. Hom, L.M. Lederman, J.C. Sens, H.D. Snyder, J.K. Yoh, J.A. Appel, B.C. Brown, C.N. Brown, W.R. Innes, K. Ueno, T. Yamanouchi, A.S. Ito, H. Jöstlein, D.M. Kaplan, and R.D. Kephart, "Observation of a dimuon resonance at 9.5 GeV in 400-GeV proton-nucleus collisions." Physical Review Letters 39(5), 252-255 (1977). Subscription required: contact your local librarian for access. (Photo credit: Fermilab)

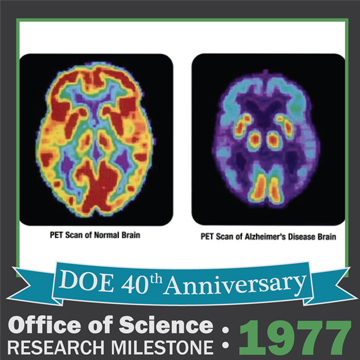

1977 - Seeing with Sugar

Nuclear medicine owes its existence to work that grew out of the research programs of DOE and its predecessors. This landmark 1977 paper described how to make 2-deoxy-2-[18F]fluoro-D-glucose (18FDG). This sugary compound, developed at DOE's Brookhaven National Laboratory, is a radiotracer. It contains a short-lived radioactive isotope of fluorine and a sugary glucose backbone. If you've ever had a PET scan, you've probably encountered this radiotracer. To prepare for a scan, you're injected with this compound; your cells take it up. Specialized instruments detect the fluorine decay and provide detailed images of your brain, heart and other organs. These PET scans are now a standard medical diagnostic procedure for brain disorders, heart disease and cancer.

T. Ido, C.N. Wan, J.S. Fowler, and A.P. Wolf, "Fluorination with molecular fluorine. A convenient synthesis of 2-deoxy-2-fluoro-D-glucose." Journal of Organic Chemistry 42, 2341-2342 (1977). [DOI: 10.1021/jo00433a037]. Subscription required: contact your local librarian for access. (Photo credit: public domain)